BPMN

BPMN (Business Process Model and Notation)

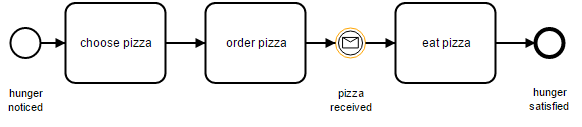

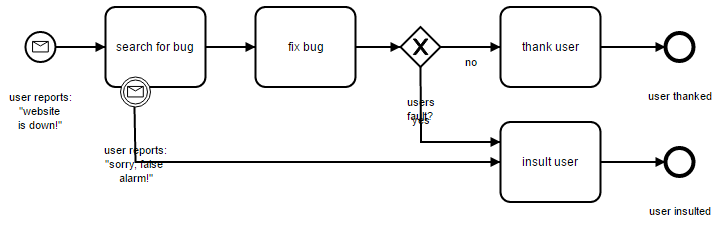

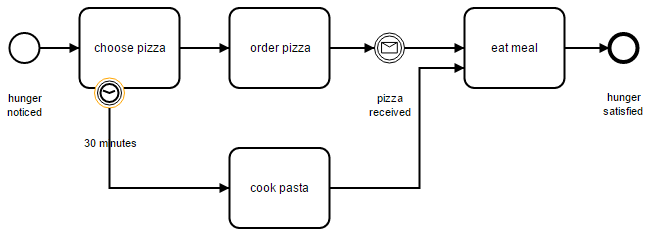

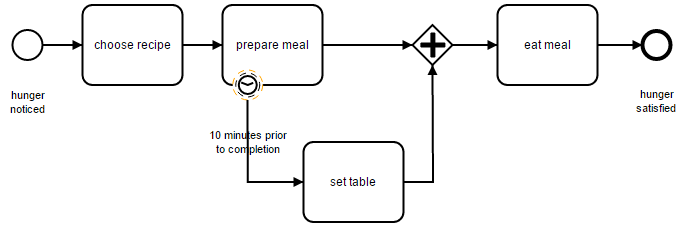

Business Process Model and Notation (BPMN) is a standard for business process modeling that provides a graphical notation for specifying business processes in a Business Process Diagram (BPD), based on a flowcharting technique very similar to activity diagrams from Unified Modeling Language (UML). The objective of BPMN is to support business process management, for both technical users and business users, by providing a notation that is intuitive to business users, yet able to represent complex process semantics. The BPMN specification also provides a mapping between the graphics of the notation and the underlying constructs of execution languages.

The primary goal of BPMN is to provide a standard notation readily understandable by all business stakeholders. These include the business analysts who create and refine the processes, the technical developers responsible for implementing them, and the business managers who monitor and manage them. Consequently, BPMN serves as a common language, bridging the communication gap that frequently occurs between business process design and implementation.

This documentation does not explain how BPMN works or how it is modeled; it shows how BPMN is connected to the Ceap platform and the concepts involved. A quick overview of how BPMN works can be viewed here.

Engine

The engine used by the Ceap Platform is Camunda, which parses and executes the BPMN file.

A recommended tutorial about Camunda BPMN.

Process Engine Documentation about Camunda is here

There is an API (br.com.me.ceap.dynamic.extension.service.ProcessService) to access the Process; the Camunda Engine is not directly accessible.

BPMN File (Process Definition)

A process definition defines the structure of a process. You could say that the process definition is the process. Camunda BPM uses BPMN 2.0 as its primary modeling language for modeling process definitions.

Each BPMN file is associated with a Document Type. A Process Instance doesn't exist without a Document of the configured Document Type (MetaDocument).

The ProcessId property is the key of a process (like a primary key).

Camunda BPMN Documentation Reference

Process Instance

A process instance is an individual execution of a process definition. The relation of the process instance to the process definition is the same as the relation between Object and Class in Object Oriented Programming (the process instance playing the role of the object and the process definition playing the role of the class in this analogy).

The process engine is responsible for creating process instances and managing their state. If you start a process instance which contains a wait state, for example a user task, the process engine must make sure that the state of the process instance is captured and stored inside a database until the wait state is left (the user task is completed).

A process instance must have a MetaDocument to be created. A MetaDocument can be associated with just one active process instance at a time for the same process id. In other words, the unique key for a process instance is: MetaDocument, Active Status, ProcessId.
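The uniqueness rule can be pictured as a composite key. The following is a minimal sketch in plain Java, not the Ceap implementation: the class `InstanceRegistry` and its methods are hypothetical names, and MetaDocument ids are modelled as plain strings.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical illustration only: models the rule "one ACTIVE instance per
// (MetaDocument, ProcessId)" with plain strings instead of Ceap types.
class InstanceRegistry {
    private final Set<String> activeKeys = new HashSet<>();

    // Returns true if the instance could be started, false if an active
    // instance already exists for the same MetaDocument and process id.
    boolean start(String metaDocumentId, String processId) {
        return activeKeys.add(metaDocumentId + "|" + processId);
    }

    // Ending the instance frees the key, so a new one may be started.
    void end(String metaDocumentId, String processId) {
        activeKeys.remove(metaDocumentId + "|" + processId);
    }
}
```

In this sketch a second `start` for the same document and process id is refused until the first instance has ended, while a different process id on the same document is accepted.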

Start a Process Instance

Map<String, Object> variables = new HashMap<String,Object>();

UserProcess userProcess = processService.getProcess("br.com.me.process");

UserProcessInstance userProcessInstance = processService.startProcess(userProcess, metaDocument, variables);

Process variables are available to all tasks in a process instance and are automatically persisted to the database in case the process instance reaches a wait state.

Query for Process Instances

List<UserProcessInstance> processInstances = processService.getProcessInstance("br.com.me.process", metaDocument, ProcessInstanceStatus.ACTIVE);

Or retrieve the active process instance directly:

UserProcessInstance processInstance = processService.getProcessInstance("br.com.me.process", metaDocument);

Interact With a Process Instance

Once you have performed a query for a particular process instance (or a list of process instances), you may want to interact with it. There are multiple possibilities to interact with a process instance, most prominently:

- Triggering it (make it continue execution):

- Through a Message Event

- Suspending it:

- Using the processService.suspendProcessInstance(...) method.

If your process uses at least one User Task, you can also interact with the process instance using the TaskService API.

Suspend Process Instances

Suspending a process instance is helpful if you want to ensure that it is not executed any further. For example, if process variables are in an undesired state, you can suspend the instance and change the variables safely.

In detail, suspension means to disallow all actions that change token state (i.e., the activities that are currently executed) of the instance. For example, it is not possible to signal an event or complete a user task for a suspended process instance, as these actions will continue the process instance execution subsequently. Nevertheless, actions like setting or removing variables are still allowed, as they do not change the token state.

Also, when suspending a process instance, all tasks belonging to it will be suspended. Therefore, it will no longer be possible to invoke actions that have effects on the task’s lifecycle (i.e., user assignment, task delegation, task completion, …). However, any actions not touching the lifecycle like setting variables or adding comments will still be allowed.

A process instance can be suspended by using the suspendProcessInstance(...) method of the ProcessService. Similarly, it can be reactivated using activateProcessInstance(...).
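The suspension rules described in this section can be summarised in a small model. This is a sketch under stated assumptions, not the Ceap or Camunda classes: `SuspendableInstance` and all of its methods are hypothetical and only mimic the behaviour described above.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of the suspension rules; not the Ceap or Camunda classes.
class SuspendableInstance {
    private boolean suspended = false;
    private boolean taskCompleted = false;
    private final Map<String, Object> variables = new HashMap<>();

    void suspend()  { suspended = true; }
    void activate() { suspended = false; }

    // Completing a task moves the token, so it is rejected while suspended.
    void completeTask() {
        if (suspended) {
            throw new IllegalStateException("process instance is suspended");
        }
        taskCompleted = true;
    }

    // Setting a variable does not change the token state, so it is always allowed.
    void setVariable(String name, Object value) { variables.put(name, value); }

    Object getVariable(String name) { return variables.get(name); }

    boolean isTaskCompleted() { return taskCompleted; }
}
```

While the instance is suspended, `setVariable` still works but `completeTask` throws; after `activate` the task can be completed again.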

Jobs and Job Definitions

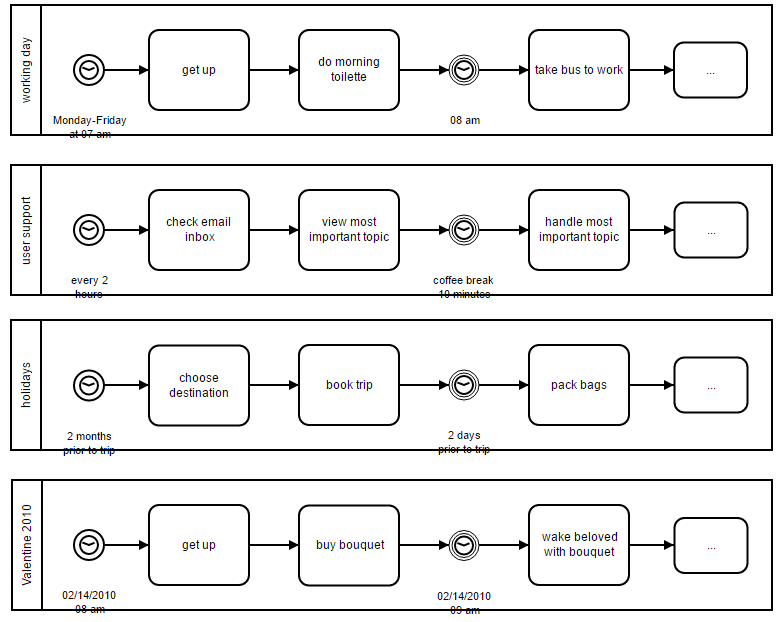

The Camunda process engine includes a component named the Job Executor. The Job Executor is a scheduling component, responsible for performing asynchronous background work. Consider the example of a Timer Event: whenever the process engine reaches the timer event, it will stop execution, persist the current state to the database and create a job to resume execution in the future. A job has a due date which is calculated using the timer expression provided in the BPMN XML.
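To make the due date calculation concrete, here is a minimal sketch that assumes the timer is defined as an ISO 8601 duration such as `PT5M`. The class `TimerDueDate` is a hypothetical helper written for this page; it only illustrates the arithmetic and is not how the engine is implemented.

```java
import java.time.Duration;
import java.time.Instant;

// Hypothetical helper: shows how a due date follows from a timer duration.
class TimerDueDate {
    // Computes when a job created at 'reachedAt' becomes due, for a timer
    // defined as an ISO 8601 duration such as "PT5M" (five minutes).
    static Instant dueDate(Instant reachedAt, String isoDuration) {
        return reachedAt.plus(Duration.parse(isoDuration));
    }
}
```

For example, a timer of `PT5M` reached at 10:00:00 UTC yields a job that is due at 10:05:00 UTC.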

When a process is deployed, the process engine creates a Job Definition for each activity in the process which will create jobs at runtime. This allows you to query information about timers and asynchronous continuations in your processes.

The Job Executor for a particular Process Instance can be viewed in the Process History Page.

Executions

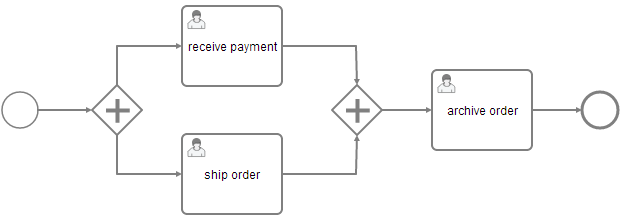

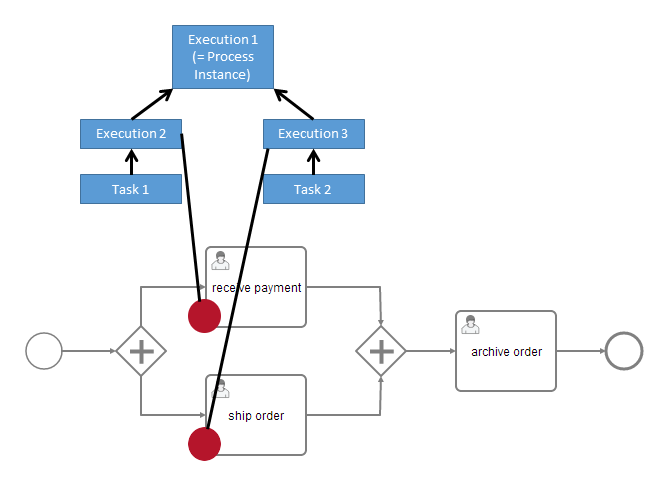

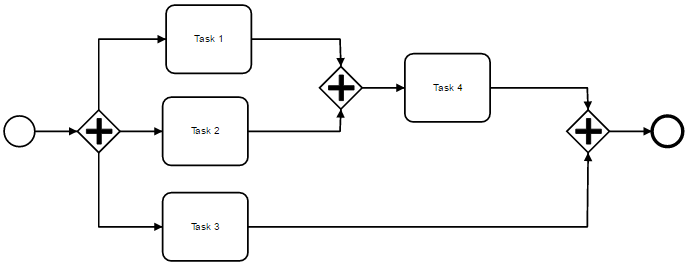

If your process instance contains multiple execution paths (for instance, after a parallel gateway), you must be able to differentiate the currently active paths inside the process instance. In the following example, the two user tasks receive payment and ship order can be active at the same time.

Internally, the process engine creates two concurrent executions inside the process instance, one for each concurrent path of execution. Executions are also created for scopes, for example if the process engine reaches an Embedded Sub Process or in case of Multi Instance.

Executions are hierarchical and all executions inside a process instance span a tree, the process instance being the root-node in the tree. Note: the process instance itself is an execution. Executions are variable scopes, meaning that dynamic data can be associated with them.
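The tree structure can be sketched as follows. `ExecutionNode` is a hypothetical stand-in written for illustration; it models only the parent-child shape and none of the engine's real behaviour.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for an engine execution; only models the tree shape.
class ExecutionNode {
    final String name;
    final ExecutionNode parent;
    final List<ExecutionNode> children = new ArrayList<>();

    ExecutionNode(String name, ExecutionNode parent) {
        this.name = name;
        this.parent = parent;
        if (parent != null) {
            parent.children.add(this);
        }
    }

    // Walks up the parent links; the root of the tree is the process instance.
    ExecutionNode processInstance() {
        ExecutionNode node = this;
        while (node.parent != null) {
            node = node.parent;
        }
        return node;
    }
}
```

After a parallel gateway, the root node would have two children (one per concurrent path), and calling `processInstance()` on either child, or on the root itself, returns the root.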

Process Variables

Variables can be used to add data to process runtime state or, more specifically, to variable scopes. Various API methods that change the state of these entities allow updating of the attached variables. In general, a variable consists of a name and a value. The name is used for identification across process constructs. For example, if one activity sets a variable named var, a follow-up activity can access it by using this name. The value of a variable is a Java object.

Variable Scopes and Variable Visibility

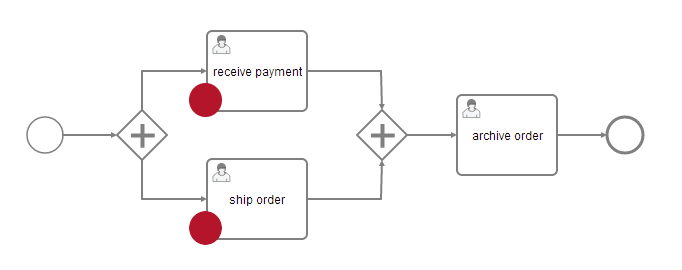

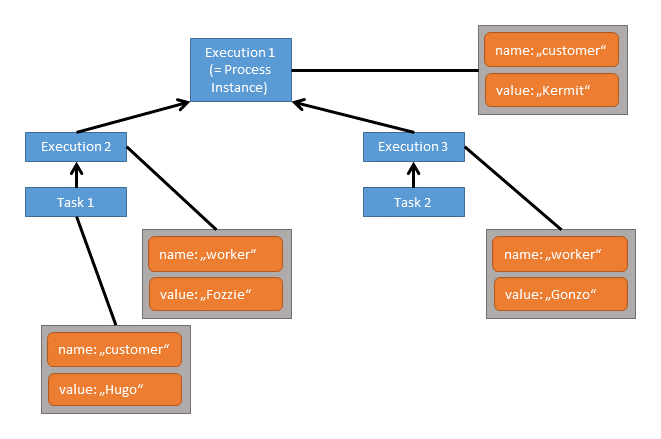

All entities that can have variables are called variable scopes. These are executions (which include process instances) and tasks. As described in the Executions section, the runtime state of a process instance is represented by a tree of executions. Consider the following process model where the red dots mark active tasks:

The runtime structure of this process is as follows:

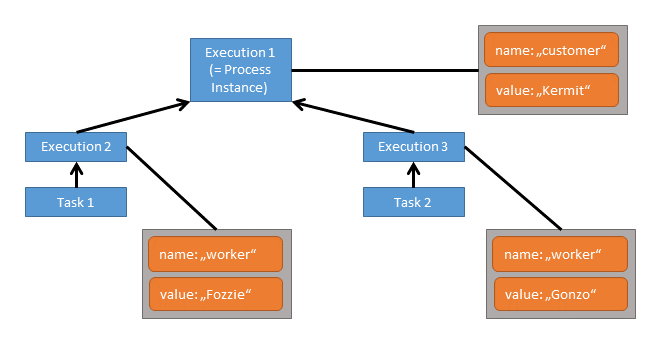

There is a process instance with two child executions, each of which has created a task. All these five entities are variable scopes and the arrows mark a parent-child relationship. A variable that is defined on a parent scope is accessible in every child scope unless a child scope defines a variable of the same name. The other way around, child variables are not accessible from a parent scope. Variables that are directly attached to the scope in question are called local variables. Consider the following assignment of variables to scopes:

In this case, when working on Task 1 the variables worker and customer are accessible. Note that due to the structure of scopes, the variable worker can be defined twice, so that Task 1 accesses a different worker variable than Task 2. However, both share the variable customer which means that if that variable is updated by one of the tasks, this change is also visible to the other.

Both tasks can access two variables each while none of these is a local variable. All three executions have one local variable each.

Now let’s say, we set a local variable customer on Task 1:

While two variables named customer and worker can still be accessed from Task 1, the customer variable on Execution 1 is hidden, so the accessible customer variable is the local variable of Task 1.
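The lookup rule (local scope first, then the parents) can be sketched in a few lines. `VariableScope` is a hypothetical class written for this page, and the placement of the variables in the usage note (customer on the process instance, worker on each child execution) is an assumption made for illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of a variable scope (execution or task) with a parent link.
class VariableScope {
    private final VariableScope parent;
    private final Map<String, Object> local = new HashMap<>();

    VariableScope(VariableScope parent) { this.parent = parent; }

    // Sets a variable directly on this scope (a "local" variable).
    void setVariableLocal(String name, Object value) { local.put(name, value); }

    // Looks in this scope first, then walks up through the parents.
    // A local variable therefore hides a parent variable of the same name.
    Object getVariable(String name) {
        for (VariableScope s = this; s != null; s = s.parent) {
            if (s.local.containsKey(name)) {
                return s.local.get(name);
            }
        }
        return null;
    }
}
```

With customer set on the root scope and a different worker on each child execution, both tasks see the same customer but different workers. Setting a local customer on Task 1 changes what Task 1 sees and leaves Task 2 untouched, and the root scope never sees worker at all.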

In general, variables are accessible in the following cases:

- Instantiating processes

- Delivering messages

- Task lifecycle transitions, such as completion or resolution

- Setting/getting variables from outside

- Setting/getting variables in a Delegate

- Expressions in the process model

- Scripts in the process model

- (Historic) Variable queries

System Variables

Ceap automatically creates system variables with process information:

public class ProcessVariable {

/**

* Parameter containing the creator (UserHumanBeing) of the process instance

*/

public static final String PARAMETER_ACTOR_OWNER = "system.ownerActor";

/**

* Parameter containing the current logged UserHumanBeing (who triggered the last user action)

*/

public static final String PARAMETER_ACTOR_CURRENT = "system.currentActor";

/**

* Parameter containing a Process.id

*/

public static final String PARAMETER_PROCESS_ID = "system.processId";

/**

* Parameter containing a Document.id

*/

public static final String PARAMETER_DOCUMENT_ID = "system.documentId";

/**

* Parameter containing a Document.identification that is the MetaDocument.id

*/

public static final String PARAMETER_META_DOCUMENT_ID = "system.metaDocumentId";

/**

* Parameter containing a ProcessDocument.id

*/

public static final String PARAMETER_PROCESS_DOCUMENT_ID = "system.processDocumentId";

/**

* Parameter containing a customRoot.id

*/

public static final String PARAMETER_CUSTOM_ROOT_ID = "system.customRootId";

}

MetaDocument Context

Each execution has a MetaDocument in its context, which is used to pass a MetaDocument to Handlers. For example, the process instance (which is also an execution) has a MetaDocument of the Document Type configured in the Process Definition.

The User Task, Call Activity and Sub Process can optionally have their own MetaDocument Context, which applies only inside their own executions. It is configured through the following extensions:

- documentTypeName: Document Type Name

- metaDocumentIdVariable: Name of a Process Variable containing the MetaDocument.id.

Expression Language

Camunda BPM supports Unified Expression Language (EL), specified as part of the JSP 2.1 standard (JSR-245). To do so, it uses the open source JUEL implementation. To get more general information about the usage of Expression Language, please read the official documentation. Especially the provided examples give a good overview of the syntax of expressions.

Within Camunda BPM, EL can be used in many circumstances to evaluate small script-like expressions. The following table provides an overview of the BPMN elements which support usage of EL.

| BPMN element | EL support |

|---|---|

| Service Task, Business Rule Task, Send Task, Message Intermediate Throwing Event, Message End Event, Execution Listener and Task Listener | Expression language as delegation code |

| Sequence Flows, Conditional Events | Expression language as condition expression |

| All Tasks, All Events, Transaction, Subprocess and Connector | Expression language inside an inputOutput parameter mapping |

| Different Elements | Expression language as the value of an attribute or element |

| All Flow Nodes, Process Definition | Expression language to determine the priority of a job |

Usage of Expression Language

Delegation Code

Besides Java code, Camunda BPM also supports the evaluation of expressions as delegation code. For general information about delegation code, see the corresponding section.

Two types of expressions are currently supported: camunda:expression and camunda:delegateExpression.

With camunda:expression it is possible to evaluate a value expression or to invoke a method expression. You can use special variables which are available inside an expression or Spring and CDI beans.

<process id="process">

<extensionElements>

<!-- execution listener which uses an expression to set a process variable -->

<camunda:executionListener event="start" expression="${execution.setVariable('test', 'foo')}" />

</extensionElements>

<!-- ... -->

<userTask id="userTask">

<extensionElements>

<!-- task listener which calls a method of a bean with current task as parameter -->

<camunda:taskListener event="complete" expression="${myBean.taskDone(task)}" />

</extensionElements>

</userTask>

<!-- ... -->

<!-- service task which evaluates an expression and saves it in a result variable -->

<serviceTask id="serviceTask"

camunda:expression="${myBean.ready}" camunda:resultVariable="myVar" />

<!-- ... -->

</process>

The attribute camunda:delegateExpression is used for expressions which evaluate to a delegate object. This delegate object must implement either the JavaDelegate or ActivityBehavior interface.

<!-- service task which calls a bean implementing the JavaDelegate interface -->

<serviceTask id="task1" camunda:delegateExpression="${myBean}" />

<!-- service task which calls a method which returns delegate object -->

<serviceTask id="task2" camunda:delegateExpression="${myBean.createDelegate()}" />

Conditions

To use conditional sequence flows or conditional events, expression language is usually used. For conditional sequence flows, a conditionExpression element of a sequence flow has to be used. For conditional events, a condition element of a conditional event has to be used. Both are of the type tFormalExpression. The text content of the element is the expression to be evaluated.

The following example shows usage of expression language as condition of a sequence flow:

<sequenceFlow>

<conditionExpression xsi:type="tFormalExpression">

${test == 'foo'}

</conditionExpression>

</sequenceFlow>

For usage of expression language on conditional events, see the following example:

<conditionalEventDefinition>

<condition type="tFormalExpression">${var1 == 1}</condition>

</conditionalEventDefinition>

inputOutput Parameters

With the Camunda inputOutput extension element you can map an inputParameter or outputParameter with expression language.

Inside the expression some special variables are available which enable the access of the current context.

The following example shows an inputParameter which uses expression language to call a method of a bean.

<serviceTask id="task" camunda:class="org.camunda.bpm.example.SumDelegate">

<extensionElements>

<camunda:inputOutput>

<camunda:inputParameter name="x">

${myBean.calculateX()}

</camunda:inputParameter>

</camunda:inputOutput>

</extensionElements>

</serviceTask>

Availability of Variables and Functions Inside Expression Language

Process Variables

All process variables of the current scope are directly available inside an expression. So a conditional sequence flow can directly check a variable value:

<sequenceFlow>

<conditionExpression xsi:type="tFormalExpression">

${test == 'start'}

</conditionExpression>

</sequenceFlow>

Internal Context Variables

Depending on the current execution context, special built-in context variables are available while evaluating expressions:

| Variable | Java Type | Context |

|---|---|---|

| execution | DelegateExecution | Available in a BPMN execution context like a service task, execution listener or sequence flow. |

| task | DelegateTask | Available in a task context like a task listener. |

The following example shows an expression which sets the variable test to the current event name of an execution listener.

<camunda:executionListener event="start"

expression="${execution.setVariable('test', execution.eventName)}" />

External Context Variables With Spring

If the process engine is integrated with Spring or CDI, it is possible to access Spring and CDI beans inside of expressions. Please see the corresponding sections for Spring and CDI for more information. The following example shows the usage of a bean which implements the JavaDelegate interface as delegateExpression.

<serviceTask id="task1" camunda:delegateExpression="${myBean}" />

With the expression attribute any method of a bean can be called.

<serviceTask id="task2" camunda:expression="${myBean.myMethod(execution)}" />

Internal Context Functions

Special built-in context functions are available while evaluating expressions:

| Function | Return Type | Description |

|---|---|---|

| document:current() | MetaDocument | Return the document associated with Process Instance. Any field of the document can be accessed. |

| document:changeCurrent('fieldName', value) | None | Change the field of the document associated with Process Instance. |

| document:currentSubDocuments('documentTypeName') | MetaDocument List | Return the sub documents list associated with Process Instance. |

| dateTime() | DateTime | Returns a Joda-Time DateTime object of the current date. Please see the Joda-Time documentation for all available functions. |

| utils:extractFieldFromList('pathToList', 'fieldName') | List | Extract a field from a list to return a list with just the field value. |

| utils:optionalActor('fieldPath') | Object | Null-safe function for task actor expressions to get the field value or an empty list if that value is null or empty. |

The following examples set the candidate users of a user task from a field of the current document, and set the due date of a user task to 3 days after the creation of the task.

<userTask id="theTask" name="Important task" camunda:candidateUsers="${document:current().header.user.email}"/>

<userTask id="theTask" name="Important task" camunda:dueDate="${dateTime().plusDays(3).toDate()}"/>

Camunda Documentation Reference

Deployments

The BPMN files can be deployed with your custom application as resources or directly in the Processes Page. The files are versioned: every time a new version is deployed, the old one is deactivated and the new one becomes active. Every newly created process automatically uses the active BPMN. Processes created before a new version was deployed remain on the version they were created with.
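The versioning behaviour can be illustrated with a small model. This is a sketch only: `DefinitionRegistry` and its methods are hypothetical and do not correspond to the Ceap deployment API.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of versioned deployments; not the Ceap deployment API.
class DefinitionRegistry {
    private final Map<String, Integer> activeVersion = new HashMap<>();

    // Deploying bumps the version; the previous one stops being the active version.
    int deploy(String processId) {
        return activeVersion.merge(processId, 1, Integer::sum);
    }

    // A new instance is pinned to whatever version is active when it starts.
    int startInstance(String processId) {
        Integer version = activeVersion.get(processId);
        if (version == null) {
            throw new IllegalArgumentException("no deployment for " + processId);
        }
        return version;
    }
}
```

An instance started before the second deployment keeps version 1, while an instance started afterwards gets version 2.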

Modeling

Camunda Modeler

The BPMN file can be created, modeled and configured by a Web Modeler in your Custom Root Process Admin Page. In the Process Listing Page a process can be viewed and/or edited too.

Another option is the Camunda Modeler, a desktop application that can be downloaded here.

BPMN File (Process Definition)

A process definition defines the structure of a process. You could say that the process definition is the process. Camunda BPM uses BPMN 2.0 as its primary modeling language for modeling process definitions. Each BPMN file is associated with a Document Type. A process doesn't exist without a Document of the configured Document Type.

Camunda BPMN Documentation Reference

Extensions

- DocumentTypeName (required): Name of the Document Type associated

Execution Listener

Execution listeners allow you to execute external Java code or evaluate an expression when certain events occur during process execution. The events that can be captured are:

- Start and end of a process instance.

- Taking a transition.

- Start and end of an activity.

- Start and end of a gateway.

- Start and end of intermediate events.

- Ending a start event or starting an end event.

The following process definition contains 3 execution listeners:

<process id="executionListenersProcess">

<extensionElements>

<camunda:executionListener

event="start"

class="org.camunda.bpm.examples.bpmn.executionlistener.ExampleExecutionListenerOne" />

</extensionElements>

<startEvent id="theStart" />

<sequenceFlow sourceRef="theStart" targetRef="firstTask" />

<userTask id="firstTask" />

<sequenceFlow sourceRef="firstTask" targetRef="secondTask">

<extensionElements>

<camunda:executionListener>

<camunda:script scriptFormat="groovy">

println execution.eventName

</camunda:script>

</camunda:executionListener>

</extensionElements>

</sequenceFlow>

<userTask id="secondTask">

<extensionElements>

<camunda:executionListener expression="${myPojo.myMethod(execution.eventName)}" event="end" />

</extensionElements>

</userTask>

<sequenceFlow sourceRef="secondTask" targetRef="thirdTask" />

<userTask id="thirdTask" />

<sequenceFlow sourceRef="thirdTask" targetRef="theEnd" />

<endEvent id="theEnd" />

</process>

The first execution listener is notified when the process starts. The listener is an external Java class (like ExampleExecutionListenerOne) and should implement the br.com.me.ceap.dynamic.extension.process.ExecutionListener interface. When the event occurs (in this case the start event), the method notify(ProcessExecution execution) is called.

public class ExampleExecutionListenerOne implements ExecutionListener {

@Override

public void notify(ProcessExecution execution) {

System.out.print(execution.getEventName());

}

}

The second execution listener is called when the transition is taken. Note that the listener element doesn’t define an event, since only take events are fired on transitions. Values in the event attribute are ignored when a listener is defined on a transition. Also it contains a camunda:script child element which defines a script which will be executed as execution listener.

The last execution listener is called when activity secondTask ends. Instead of using the class attribute on the listener declaration, an expression is defined, which is evaluated/invoked when the event is fired.

As with other expressions, execution variables are resolved and can be used. Because the execution implementation object has a property that exposes the event name, it’s possible to pass the event-name to your methods using execution.eventName.

Execution listeners also support using a delegateExpression, similar to a service task. This class should implement br.com.me.ceap.dynamic.extension.process.ExecutionListener as well.

<camunda:executionListener event="start" delegateExpression="${myExecutionListenerBean}" />

Utilities Tasks

There are some existing Execution Listener implementations, such as:

br.com.me.ceap.process.impl.service.MacroTaskHandler: Executes a macro using the following properties set in the task extensions:

- macro: Macro name.

- macroUser (optional): E-mail for the human being's macro.

Tasks

Camunda Documentation reference.

Task Markers

Loop

A loop task repeats until a defined condition either applies or ceases to apply. Perhaps we suggest various dishes to our dinner guests until everyone agrees. Then, we can prepare the meal:

In the example, we executed the task first and checked afterwards to see if we needed it to execute again. Programmers know the principle as the "do-while" construct. We can also apply a "while-do" construct, however, and so check for a condition before the task instead of afterward. This occurs rarely, but it makes sense if the task may not execute at all.

You can attach the condition on which a loop task executes for the first time or, as shown in the example, apply the condition on repeated executions as an annotation to the task. You can store this condition as an attribute in a formal language of your BPMN tool as well. That makes sense if the process is to be executed by a process engine.
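For readers who think in code, the two loop variants differ only in when the condition is checked. The sketch below is an illustration in plain Java: the class `LoopKinds`, its method names, and the simplification that each suggestion satisfies exactly one more guest are all assumptions made for the example.

```java
// Hypothetical illustration of the two loop variants; not engine code.
class LoopKinds {
    // "do-while": the task runs first, then the condition is checked,
    // so at least one suggestion is always made.
    static int suggestDishesDoWhile(int guestsStillUnhappy) {
        int suggestions = 0;
        do {
            suggestions++;
            guestsStillUnhappy--;
        } while (guestsStillUnhappy > 0);
        return suggestions;
    }

    // "while-do": the condition is checked first, so the task may never run.
    static int suggestDishesWhileDo(int guestsStillUnhappy) {
        int suggestions = 0;
        while (guestsStillUnhappy > 0) {
            suggestions++;
            guestsStillUnhappy--;
        }
        return suggestions;
    }
}
```

When nobody is unhappy to begin with, the do-while variant still makes one suggestion and the while-do variant makes none; otherwise both behave the same.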

Multiple Instance

A multi-instance activity is a way of defining repetition for a certain step in a business process. In programming concepts, a multi-instance matches the for each construct: it allows execution of a certain step or even a complete subprocess for each item in a given collection, sequentially or in parallel.

A multi-instance is a regular activity that has extra properties defined (so-called multi-instance characteristics) which will cause the activity to be executed multiple times at runtime. The following activities can become a multi-instance activity:

- Service Task

- Send Task

- User Task

- Business Rule Task

- Script Task

- Receive Task

- Manual Task

- (Embedded) Sub-Process

- Call Activity

- Transaction Subprocess

A Gateway or Event cannot become multi-instance.

If an activity is multi-instance, this is indicated by three short lines at the bottom of that activity. Three vertical lines indicate that the instances will be executed in parallel, while three horizontal lines indicate sequential execution.

As required by the spec, each parent execution of the created executions for each instance will have the following variables:

- nrOfInstances: the total number of instances

- nrOfActiveInstances: the number of currently active, i.e. not yet finished, instances. For a sequential multi-instance, this will always be 1

- nrOfCompletedInstances: the number of already completed instances

These values can be retrieved by calling the execution.getVariable(x) method.

Additionally, each of the created executions will have an execution-local variable (i.e. not visible to the other executions, and not stored on process instance level):

- loopCounter: indicates the index in the for each loop of that particular instance

To make an activity multi-instance, the activity xml element must have a multiInstanceLoopCharacteristics child element.

<multiInstanceLoopCharacteristics isSequential="false|true">

...

</multiInstanceLoopCharacteristics>

The isSequential attribute indicates if the instances of that activity are executed sequentially or in parallel.

The number of instances is calculated once, when entering the activity. There are a few ways of configuring this. One way is directly specifying a number by using the loopCardinality child element.

Expressions that resolve to a positive number are also possible:

<multiInstanceLoopCharacteristics isSequential="false|true">

<loopCardinality>${nrOfOrders-nrOfCancellations}</loopCardinality>

</multiInstanceLoopCharacteristics>

Another way to define the number of instances is to specify the name of a process variable which is a collection using the loopDataInputRef child element. For each item in the collection, an instance will be created. Optionally, it is possible to set that specific item of the collection for the instance using the inputDataItem child element. This is shown in the following XML example:

<userTask id="miTasks" name="My Task ${loopCounter}" camunda:assignee="${assignee}">

<multiInstanceLoopCharacteristics isSequential="false">

<loopDataInputRef>assigneeList</loopDataInputRef>

<inputDataItem name="assignee" />

</multiInstanceLoopCharacteristics>

</userTask>

Suppose the variable assigneeList contains the values [kermit, gonzo, foziee]. In the snippet above, three user tasks will be created in parallel. Each of the executions will have a process variable named assignee containing one value of the collection, which is used to assign the user task in this example.

The downside of loopDataInputRef and inputDataItem is that 1) the names are pretty hard to remember and 2) due to the BPMN 2.0 schema restrictions they can’t contain expressions. Camunda solves this by offering the collection and elementVariable attributes on the multiInstanceLoopCharacteristics element:

<userTask id="miTasks" name="My Task" camunda:assignee="${assignee}">

<multiInstanceLoopCharacteristics isSequential="true"

camunda:collection="${myService.resolveUsersForTask()}" camunda:elementVariable="assignee" >

</multiInstanceLoopCharacteristics>

</userTask>

A multi-instance activity ends when all instances are finished. However, it is possible to specify an expression that is evaluated every time one instance ends. When this expression evaluates to true, all remaining instances are destroyed and the multi-instance activity ends, continuing the process. Such an expression must be defined in the completionCondition child element.

<userTask id="miTasks" name="My Task" camunda:assignee="${assignee}">

<multiInstanceLoopCharacteristics isSequential="false"

camunda:collection="assigneeList" camunda:elementVariable="assignee" >

<completionCondition>${nrOfCompletedInstances/nrOfInstances >= 0.6 }</completionCondition>

</multiInstanceLoopCharacteristics>

</userTask>

In this example, parallel instances will be created for each element of the assigneeList collection. However, when 60% of the tasks are completed, the other tasks are deleted and the process continues.
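The arithmetic behind the 60% condition can be checked with a short sketch. `CompletionCondition` is a hypothetical helper written for this page; it mirrors the expression from the XML above in plain Java and does not call the engine.

```java
// Hypothetical helper that mirrors the completion condition in plain Java.
class CompletionCondition {
    // Mirrors ${nrOfCompletedInstances/nrOfInstances >= 0.6}. The cast to
    // double matters in Java: integer division would truncate 3/5 to 0.
    static boolean isDone(int nrOfCompletedInstances, int nrOfInstances) {
        return (double) nrOfCompletedInstances / nrOfInstances >= 0.6;
    }

    // Completes instances one by one and returns how many had to finish
    // before the condition ended the multi-instance activity.
    static int completionsNeeded(int nrOfInstances) {
        int completed = 0;
        while (completed < nrOfInstances) {
            completed++;
            if (isDone(completed, nrOfInstances)) {
                break;
            }
        }
        return completed;
    }
}
```

With five assignees, the activity ends as soon as the third task is completed (3/5 = 0.6); with three assignees, it ends after the second.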

Camunda Multiple Instances Documentation reference.

Boundary Events and Multi-Instance

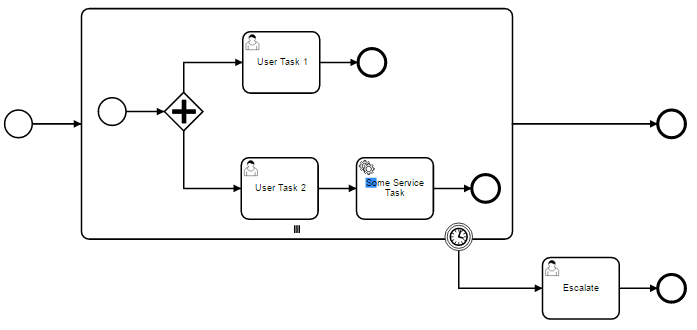

Since a multi-instance is a regular activity, it is possible to define a boundary event on its boundary. In case of an interrupting boundary event, when the event is caught, all instances that are still active will be destroyed. For example, take the following multi-instance subprocess:

Here all instances of the subprocess will be destroyed when the timer fires, regardless of how many instances there are or which inner activities are currently not completed yet.

Loops

The loop marker is not yet natively supported by the engine. Multiple Instance is not a good substitute for loops in general, because the number of repetitions must be known in advance (although its completion condition may already be sufficient in some cases).

To get around this limitation the solution is to explicitly model the loop in your BPMN process:

Be assured that we have the loop marker in our backlog to be added to the engine.

Messages

When a multiple-instance task has a boundary message event, a message will be created for each instance. To find the message you want, you can use any variable of that instance:

UserProcess userProcess = processService.getProcess("multipleMessage");

UserProcessInstance userProcessInstance = processService.startProcess(userProcess, metaDocument);

Map<String, Object> variables = new HashMap<>();

variables.put("loopCounter", 1);

String executionId = processService.getExecutionIdByProcessInstanceAndMessage(userProcessInstance.getProcessInstanceId(), "message", variables);

processService.sendMessage(executionId, "message", new HashMap<>());

User Task

A user task is used to model work that needs to be done by a human actor. When the process execution arrives at such a user task, a new task is created in the task list of the user(s) or group(s) assigned to that task but only one user can complete it.

A user task is defined in XML as follows. The id attribute is required while the name attribute is optional.

<userTask id="theTask" name="Important task" />

Description

A user task can also have a description. In fact, any BPMN 2.0 element can have a description. A description is defined by adding the documentation element.

<userTask id="theTask" name="Schedule meeting" >

<documentation>

Schedule an engineering meeting for next week with the new hire.

</documentation>

</userTask>

Task Listeners

A task listener is used to execute custom Java logic or an expression upon the occurrence of a certain task-related event. It can only be added in the process definition as a child element of a user task. Note that this also must happen as a child of the BPMN 2.0 extensionElements and in the Camunda namespace, since a task listener is a construct specifically for the Camunda engine.

<userTask id="myTask" name="My Task" >

<extensionElements>

<camunda:taskListener event="create" class="meceap.process.TestTaskListener" />

</extensionElements>

</userTask>

A task listener supports the following attributes:

- event (required): the type of task event on which the task listener will be invoked. Possible events are:

- create: occurs when the task has been created and all task properties are set.

- assignment (not supported): occurs when the task is assigned to somebody.

- complete: occurs when the task is completed and just before the task is deleted from the runtime data.

- delete: occurs just before the task is deleted from the runtime data.

- class: the delegation class that must be called. This class must implement the br.com.me.ceap.dynamic.extension.process.TaskListener interface.

public class TestTaskListener implements TaskListener {

@Override

public void notifyTask(ProcessExecution execution) {

// Custom logic goes here

}

}

- expression: (cannot be used together with the class attribute) specifies an expression that will be executed when the event happens. It is possible to pass the name of the event (using task.eventName) as parameter to the called object.

<camunda:taskListener event="create" expression="${myObject.callMethod(task.eventName)}" />

- delegateExpression: allows you to specify an expression that resolves to an object implementing the br.com.me.ceap.dynamic.extension.process.TaskListener interface, similar to a service task.

<camunda:taskListener event="create" delegateExpression="${testTaskListener}" />

Besides the class, expression and delegateExpression attributes, a camunda:script child element can be used to specify a script as task listener. An external script resource can also be declared with the resource attribute of the camunda:script element. Using scripts is not recommended.

<userTask id="task">

<extensionElements>

<camunda:taskListener event="create">

<camunda:script scriptFormat="groovy">

println task.eventName

</camunda:script>

</camunda:taskListener>

</extensionElements>

</userTask>

User Assignment

A user task can be assigned to many users through a list of user e-mail addresses, a list of groups, or an ActorResolver.

Candidate Users

candidateUsers attribute: this custom extension allows you to make a user a candidate for a task.

<userTask id="theTask" name="my task" camunda:candidateUsers="bruno.lopes@me.com.br, marcelo.beccari@me.com.br" />

Candidate Groups

candidateGroups attribute: this custom extension allows you to make a group a candidate for a task.

<userTask id="theTask" name="my task" camunda:candidateGroups="Management, accountancy" />

Actor Resolver

actorResolver: this custom property allows you to set a class to resolve the users and/or groups to be candidates of a task.

<userTask id="theTask" name="my task">

<extensionElements>

<camunda:properties>

<camunda:property name="actorResolver" value="meceap.process.TestActorResolver" />

</camunda:properties>

</extensionElements>

</userTask>

The class must implement br.com.me.ceap.dynamic.extension.process.ActorResolver and can be a Spring bean.

@Named

public class TestActorResolver implements ActorResolver {

@Inject

private HumanBeingService humanBeingService;

@Override

public List<ActorResolverResult> getActors(ProcessExecution execution) {

ActorResolverResult actorResolverResult = new ActorResolverResult();

actorResolverResult.setUserHumanBeing(humanBeingService.getByEmail("bruno.lopes@me.com.br"));

return Arrays.asList(actorResolverResult);

}

}

Task Handler

taskHandler: this custom property allows you to set a class that resolves the page the user will see when opening the task.

<userTask id="theTask" name="my task">

<extensionElements>

<camunda:properties>

<camunda:property name="taskHandler" value="meceap.process.TestTaskHandler" />

</camunda:properties>

</extensionElements>

</userTask>

The class must implement br.com.me.ceap.web.extension.handler.UserTaskHandler and can be a Spring bean.

@Named

public class TestTaskHandler implements UserTaskHandler {

@Override

public ModelAndView handleTask(ProcessExecution execution) {

UserPrincipal principal = new UserPrincipal();

return new ModelAndView(new RedirectView(String.format("/%s/do/document/show/%s",

principal.getCustomRoot().getName(), execution.getMetaDocument().getId())));

}

}

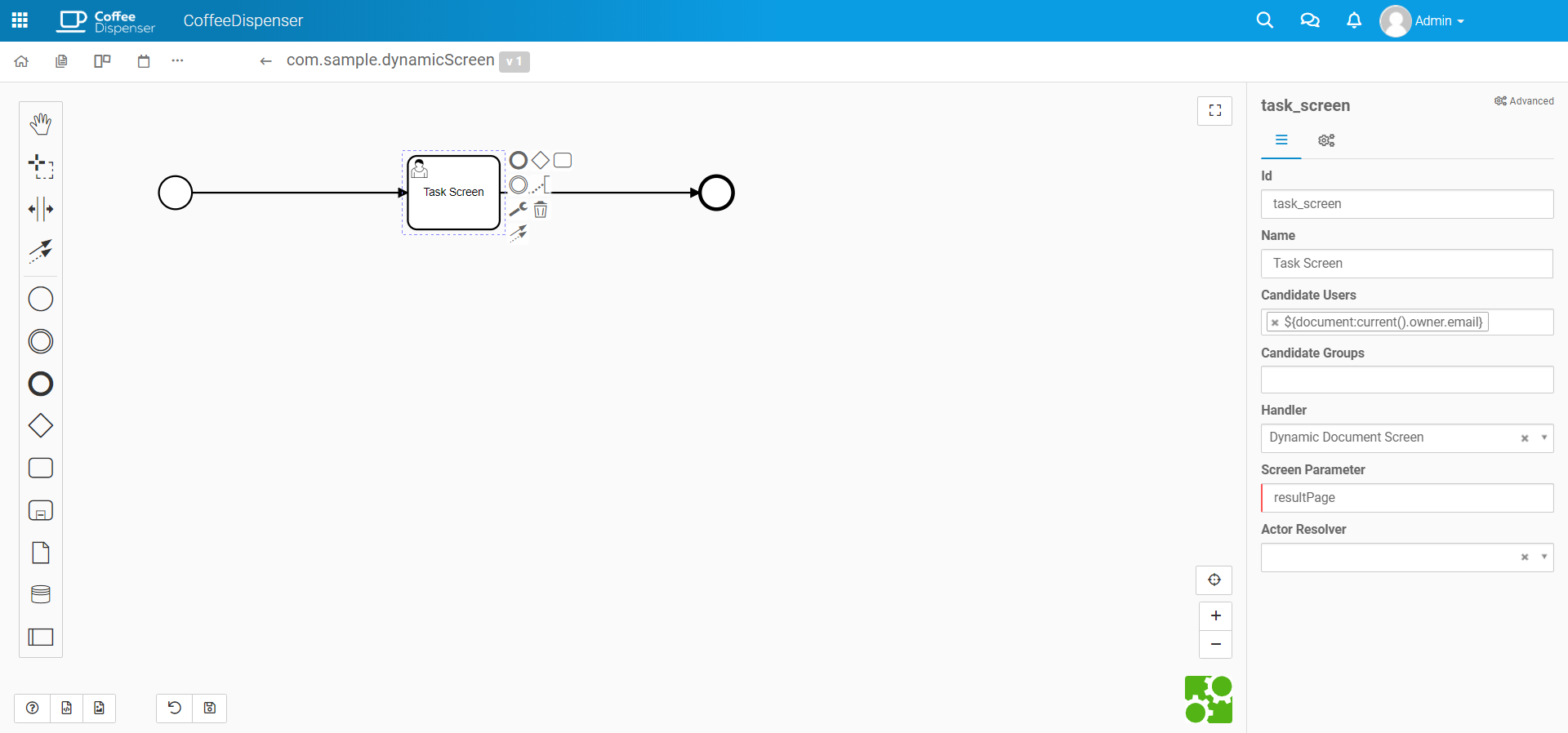

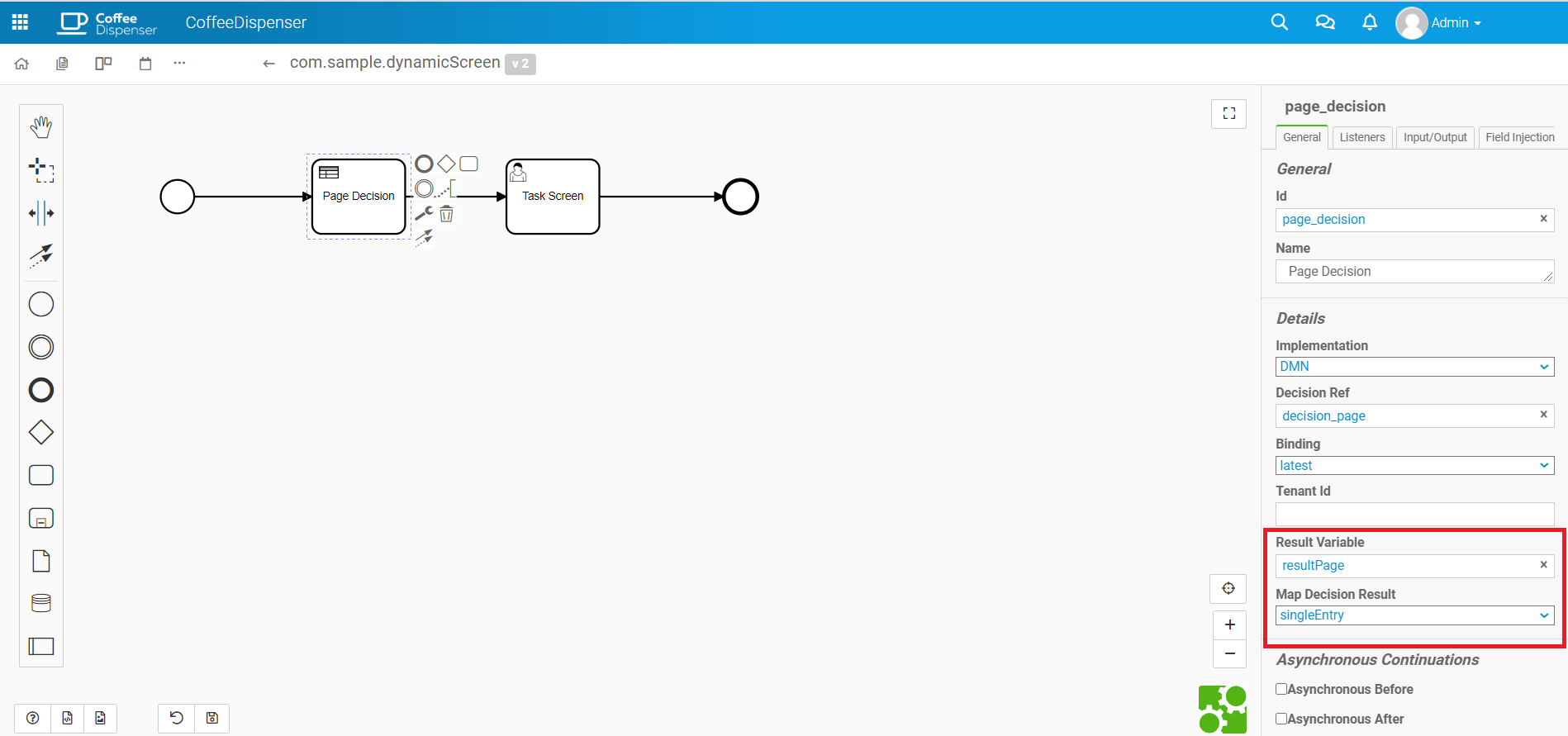

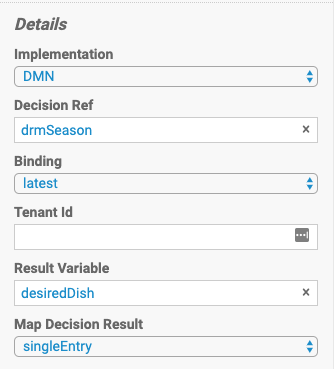

Dynamic Document Screen

A built-in task handler that evaluates the screen name from the parameter configured in the component.

- Screen Parameter: a process variable containing the name of the screen that will be used when accessing the task.

Using with DMN

This task handler can be configured to process the output of a decision table and redirect to a specific screen if the output value is a screen name.

The output name must be the same as the value configured in the task handler Screen Parameter field. Furthermore, the output result type has to be the "singleEntry" option; otherwise an error will be displayed.

Fallback Screen

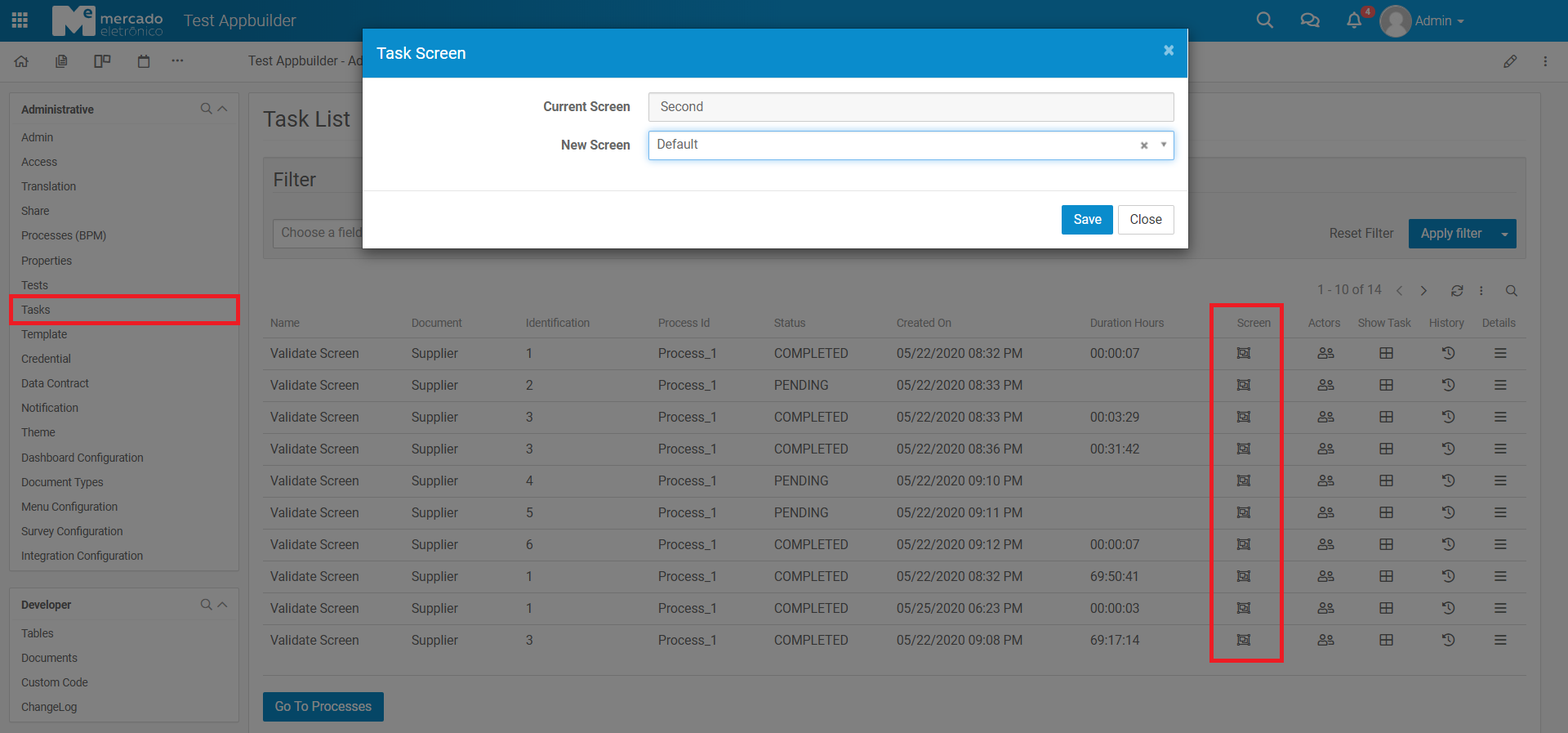

An option in the task list of the Task menu, used to define a new screen for pending tasks.

- Current Screen: the current screen the task is trying to use. The value is loaded if the handler is a Document Screen or a Dynamic Document Screen, otherwise, or if the task isn't pending, the value will be empty.

- New Screen: the new screen to use when accessing the task. It will be used whenever the task is accessed, regardless of the bpm component settings.

Task Notify

taskNotify: this custom property allows you to set whether the candidate users will be notified about the new task. The default is true.

<userTask id="theTask" name="my task">

<extensionElements>

<camunda:properties>

<camunda:property name="taskNotify" value="false" />

</camunda:properties>

</extensionElements>

</userTask>

Utilities Tasks

There are some existing User Task implementations, such as:

br.com.me.ceap.process.impl.task.ScreenUserTaskHandler: redirects the user to a specific screen, configured through the following property set in the task extensions:

- screen: Screen name

Limitations

Other properties described in the Camunda User Task Documentation, such as Forms and assignment using BPMN Resource Assignments, are not supported.

Service Task

A service task is used to invoke services. In Camunda this is done by calling Java code or providing a work item for an external worker to complete asynchronously.

Calling Java Code

There are 4 ways of declaring how to invoke Java logic:

- Specifying a class that implements br.com.me.ceap.dynamic.extension.process.TaskHandler

- Evaluating an expression that resolves to a delegation object

- Invoking a method expression

- Evaluating a value expression

To specify a class that is called during process execution, the fully qualified classname needs to be provided by the camunda:class attribute.

<serviceTask id="javaService"

name="My Java Service Task"

camunda:class="org.camunda.bpm.MyTaskHandler" />

And the class would be:

public class MyTaskHandler implements TaskHandler {

@Override

public void execute(ProcessExecution execution) {

// custom code here

}

}

It is also possible to use an expression that resolves to an object. This object must follow the same rules as objects that are created when the camunda:class attribute is used.

<serviceTask id="beanService"

name="My Bean Service Task"

camunda:delegateExpression="${myDelegateBean}" />

Or an expression which calls a method or resolves to a value.

<serviceTask id="expressionService"

name="My Expression Service Task"

camunda:expression="${myBean.doWork()}" />

Service Task Results

The return value of a service execution (for a service task exclusively using expression) can be assigned to an already existing or to a new process variable by specifying the process variable name as a literal value for the camunda:resultVariable attribute of a service task definition. Any existing value for a specific process variable will be overwritten by the result value of the service execution. When not specifying a result variable name, the service execution result value is ignored.

<serviceTask id="aMethodExpressionServiceTask"

camunda:expression="#{myService.doSomething()}"

camunda:resultVariable="myVar" />

In the example above, the result of the service execution (the return value of the doSomething() method invocation on object myService) is set to the process variable named myVar after the service execution completes.

Result variables and multi-instance: note that when you use camunda:resultVariable in a multi-instance construct, for example in a multi-instance subprocess, the result variable is overwritten every time the task completes, which may appear as random behavior. See camunda:resultVariable for details.
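The overwrite behavior can be pictured with a small, engine-independent sketch. It is only an illustration: process variables are modeled as a plain map, the class name ResultVariableSketch is hypothetical, and the instances run sequentially here, whereas in a parallel multi-instance the completion order (and therefore the surviving value) is not predictable.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Illustrative stand-in, not engine code: process variables are a plain map.
class ResultVariableSketch {

    // Runs the same "service call" once per item and stores every return value
    // under the same variable name, as camunda:resultVariable would.
    static Map<String, Object> runMultiInstance(List<String> items,
                                                Function<String, Object> service,
                                                String resultVariable) {
        Map<String, Object> processVariables = new HashMap<>();
        for (String item : items) {
            // Each completion overwrites the value written by the previous instance.
            processVariables.put(resultVariable, service.apply(item));
        }
        return processVariables;
    }

    public static void main(String[] args) {
        Map<String, Object> variables = runMultiInstance(
                Arrays.asList("a", "bb", "ccc"), String::length, "myVar");
        // Only the value written by the last completed instance survives.
        System.out.println(variables); // prints {myVar=3}
    }
}
```

If every result is needed, one option is to have each instance write to a distinct variable name or append to a collection instead of sharing one result variable.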

Utilities Tasks

There are some existing Service Task implementations, such as:

br.com.me.ceap.process.impl.service.MacroTaskHandler: executes a macro, configured through the following properties set in the task extensions:

- macro: Macro name.

- macroUser (optional): E-mail of the human being for the macro.

Limitations

The other properties specified in Camunda Service Task Documentation such as External Tasks are not supported.

Script Task

A script task is an automated activity. When a process execution arrives at the script task, the corresponding script is executed.

Although the system supports it, its use is not recommended; use a Service Task instead.

Camunda Script Task Documentation

Business Rule Task

A Business Rule task is used to synchronously execute one or more rules.

You can use the Camunda DMN engine integration to evaluate a DMN decision. You have to specify the decision key to evaluate as the camunda:decisionRef attribute. Additionally, the camunda:decisionRefBinding specifies which version of the decision should be evaluated.

A typical configuration to use DMN is as follows:

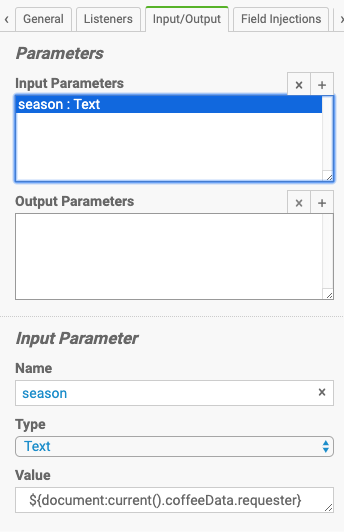

You also have to configure Input Variables. Camunda expressions can be used, for example if you want to pass document field values to the DMN. Output variables are optional: you can configure them if the DMN has more than one output variable, but even then they are not required, because you can change Map Decision Result to ResultList or CollectEntries.

Camunda Business Rule Task Documentation

Manual Task

A Manual Task defines a task that is external to the BPM engine. It is used to model work that is done by somebody who the engine does not need to know of and for which there is no known system or UI interface. For the engine, a manual task is handled as a pass-through activity, automatically continuing the process at the moment the process execution arrives at it.

Camunda Manual Task Documentation

Receive Task

A Receive Task is a simple task that waits for the arrival of a certain message. When the process execution arrives at a Receive Task, the process state is committed to the persistence storage. This means that the process will stay in this wait state until a specific message is received by the engine, which triggers the continuation of the process beyond the Receive Task.

A Receive Task with a message reference can be triggered like an ordinary event:

<definitions ...>

<message id="newInvoice" name="newInvoiceMessage"/>

<process ...>

<receiveTask id="waitState" name="wait" messageRef="newInvoice">

...

You can explicitly query for the subscription and trigger it:

String executionId = processService.getExecutionIdByProcessInstanceAndMessage(processInstanceId, "newInvoiceMessage", new HashMap<String, Object>());

processService.sendMessage(executionId, "newInvoiceMessage", new HashMap<String, Object>());

Limitations

The other properties specified in Camunda Receive Task Documentation such as External Tasks are not supported.

Send Task

A send task is used to send a message. In Camunda this is done by calling Java code.

The send task has the same behavior as a service task.

<sendTask id="sendTask" camunda:class="org.camunda.bpm.MySendTaskHandler" />

Camunda Send Task Documentation

Sub Processes

Embedded Subprocess

A Subprocess is an activity that contains other activities, gateways, events, etc. which itself forms a process that is part of the bigger process. A Subprocess is completely defined inside a parent process (that’s why it’s often called an embedded Subprocess).

Subprocesses have two major use cases:

- Subprocesses allow hierarchical modeling. Many modeling tools allow that subprocesses can be collapsed, hiding all the details of the subprocess and displaying a high-level end-to-end overview of the business process.

- A subprocess creates a new scope for events. Events that are thrown during execution of the subprocess can be caught by a boundary event on the boundary of the subprocess, thus creating a scope for that event, limited to the subprocess.

Using a subprocess does impose some constraints:

- A subprocess can only have one none start event, no other start event types are allowed. A subprocess must have at least one end event. Note that the BPMN 2.0 specification allows to omit the start and end events in a subprocess, but the current engine implementation does not support this.

- Sequence flows cannot cross subprocess boundaries.

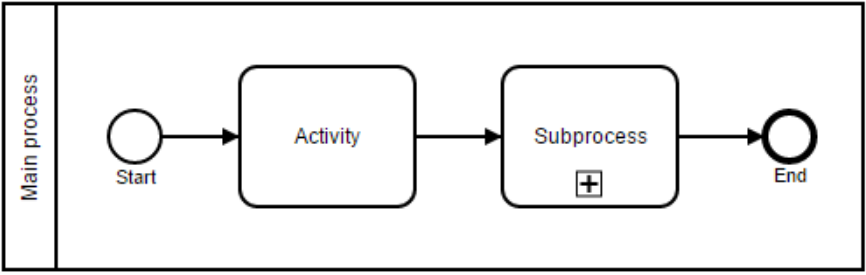

A subprocess is visualized as a typical activity, i.e., a rounded rectangle. In case the subprocess is collapsed, only the name and a plus-sign are displayed, giving a high-level overview of the process:

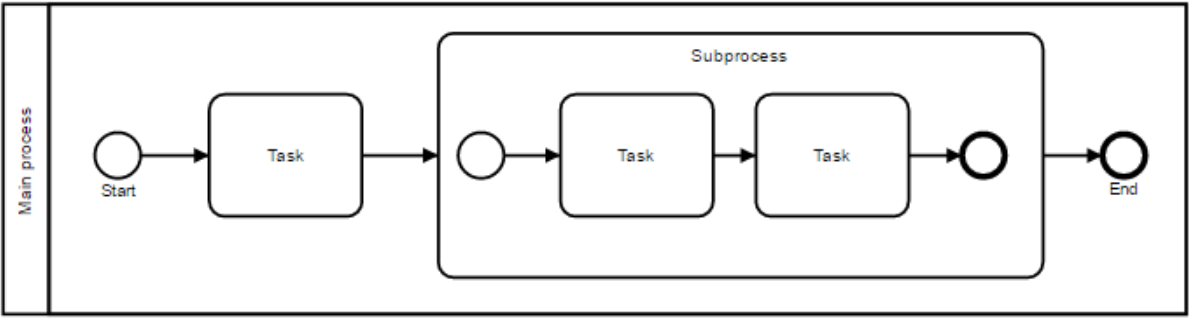

In case the subprocess is expanded, the steps of the subprocess are displayed within the subprocess boundaries:

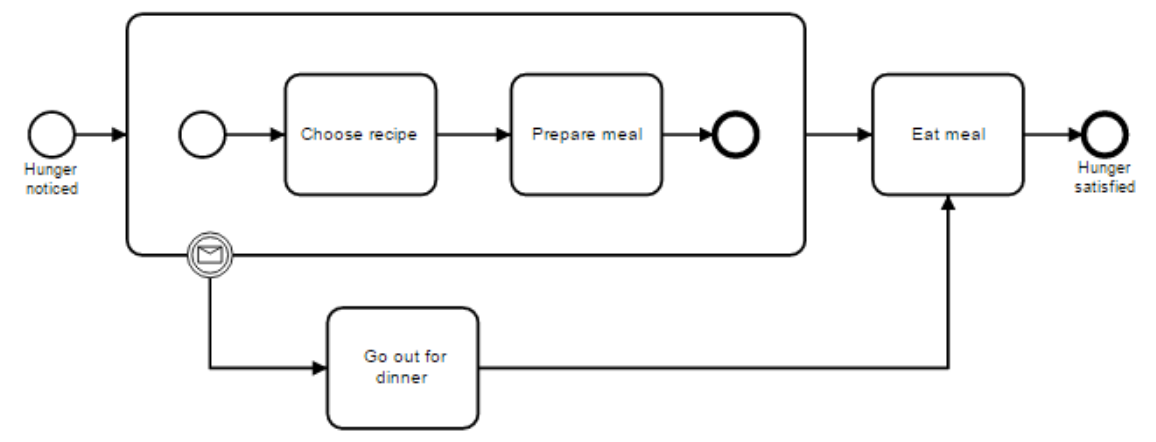

One of the main reasons to use a subprocess is to define a scope for a certain event. The following process model shows this: both the investigate software and investigate hardware tasks need to be done in parallel, but both tasks need to be done within a certain time, before Level 2 support is consulted. Here, the scope of the timer (i.e., which activities must be done in time) is constrained by the subprocess.

A subprocess is defined by the subProcess element. All activities, gateways, events, etc. that are part of the subprocess need to be enclosed within this element.

<startEvent id="outerStartEvent" />

<!-- ... other elements ... -->

<subProcess id="subProcess">

<startEvent id="subProcessStart" />

<!-- ... other subprocess elements ... -->

<endEvent id="subProcessEnd" />

</subProcess>

Camunda Embedded Subprocess Documentation

Call Activity

BPMN 2.0 makes a distinction between an embedded subprocess and a call activity. From a conceptual point of view, both will call a subprocess when process execution arrives at the activity.

The difference is that the call activity references a process that is external to the process definition, whereas the subprocess is embedded within the original process definition. The main use case for the call activity is to have a reusable process definition that can be called from multiple other process definitions. Although not yet part of the BPMN specification, it is also possible to call a CMMN case definition.

When process execution arrives at the call activity, a new process instance is created, which is used to execute the subprocess, potentially creating parallel child executions as within a regular process. The main process instance waits until the subprocess is completely ended, and continues the original process afterwards.

A call activity is visualized the same way as a collapsed embedded subprocess, however with a thick border. Depending on the modeling tool, a call activity can also be expanded, but the default visualization is the collapsed representation.

A call activity is a regular activity that requires a calledElement which references a process definition by its key. In practice, this means that the id of the process is used in the calledElement:

<callActivity id="callCheckCreditProcess" name="Check credit" calledElement="checkCreditProcess" />

Note that the process definition of the subprocess is resolved at runtime. This means that the subprocess can be deployed independently from the calling process, if needed.

CalledElement Binding

In a call activity the calledElement attribute contains the process definition key as reference to the subprocess. This means that the latest process definition version of the subprocess is always called. To call another version of the subprocess it is possible to define the attributes calledElementBinding and calledElementVersion in the call activity. Both attributes are optional.

CalledElementBinding has three different values:

- latest: always call the latest active process definition version (which is also the default behaviour if the attribute isn’t defined)

- deployment: if called process definition is part of the same deployment as the calling process definition, use the version from deployment

- version: call a fixed version of the process definition, in this case calledElementVersion is required. The version number can either be specified in the BPMN XML or returned by an expression (${versionToCall})

<callActivity id="callSubProcess" calledElement="checkCreditProcess"

camunda:calledElementBinding="latest|deployment|version"

camunda:calledElementVersion="17">

</callActivity>
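The three binding values can be summarized with a minimal sketch of the lookup they describe. This is not the engine's implementation: the Definition type, the resolve method and the deployment ids are hypothetical stand-ins, suspension ("active" versions) is ignored, and throwing when nothing matches is an assumption of the sketch.

```java
import java.util.Arrays;
import java.util.List;

class CalledElementBindingSketch {

    // Hypothetical description of one deployed version of a process definition.
    static final class Definition {
        final String key;
        final int version;
        final String deploymentId;

        Definition(String key, int version, String deploymentId) {
            this.key = key;
            this.version = version;
            this.deploymentId = deploymentId;
        }
    }

    // Picks the definition a call activity would start for the given binding.
    static Definition resolve(List<Definition> deployed, String key, String binding,
                              Integer fixedVersion, String callerDeploymentId) {
        Definition best = null;
        for (Definition d : deployed) {
            if (!d.key.equals(key)) {
                continue;
            }
            boolean matches;
            switch (binding) {
                case "latest":
                    matches = true;
                    break;
                case "deployment":
                    matches = d.deploymentId.equals(callerDeploymentId);
                    break;
                case "version":
                    if (fixedVersion == null) {
                        throw new IllegalArgumentException("calledElementVersion is required");
                    }
                    matches = d.version == fixedVersion;
                    break;
                default:
                    throw new IllegalArgumentException("Unknown binding: " + binding);
            }
            // Among the matching candidates, keep the highest version.
            if (matches && (best == null || d.version > best.version)) {
                best = d;
            }
        }
        if (best == null) {
            throw new IllegalStateException("No matching definition for key " + key);
        }
        return best;
    }

    public static void main(String[] args) {
        List<Definition> deployed = Arrays.asList(
                new Definition("checkCreditProcess", 16, "deployment-A"),
                new Definition("checkCreditProcess", 17, "deployment-B"),
                new Definition("checkCreditProcess", 18, "deployment-C"));
        System.out.println(resolve(deployed, "checkCreditProcess", "latest", null, "deployment-B").version);     // 18
        System.out.println(resolve(deployed, "checkCreditProcess", "deployment", null, "deployment-B").version); // 17
        System.out.println(resolve(deployed, "checkCreditProcess", "version", 16, "deployment-B").version);      // 16
    }
}
```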

Passing Variables

You can pass process variables to the subprocess and vice versa. The data is copied into the subprocess when it is started and copied back into the main process when it ends.

<callActivity id="callSubProcess" calledElement="checkCreditProcess" >

<extensionElements>

<camunda:in source="someVariableInMainProcess" target="nameOfVariableInSubProcess" />

<camunda:out source="someVariableInSubProcess" target="nameOfVariableInMainProcess" />

</extensionElements>

</callActivity>

By default, variables declared in out elements are set in the highest possible variable scope.

Furthermore, you can configure the call activity so that all process variables are passed to the subprocess and vice versa. The process variables have the same name in the main process as in the subprocess.

<callActivity id="callSubProcess" calledElement="checkCreditProcess" >

<extensionElements>

<camunda:in variables="all" />

<camunda:out variables="all" />

</extensionElements>

</callActivity>

It is possible to use expressions here as well:

<callActivity id="callSubProcess" calledElement="checkCreditProcess" >

<extensionElements>

<camunda:in sourceExpression="${x+5}" target="y" />

<camunda:out sourceExpression="${y+5}" target="z" />

</extensionElements>

</callActivity>

So in the end z = y+5 = x+5+5 holds.

Source expressions are evaluated in the context of the called process instance.
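The arithmetic of the expression example can be traced with a small sketch. It is an illustration only: both variable scopes are modeled as plain maps, the class and method names are hypothetical, and the called process is assumed to leave y unchanged.

```java
import java.util.HashMap;
import java.util.Map;

class CallActivityMappingSketch {

    // Mimics the in/out mappings of the call activity for integer variables.
    static Map<String, Integer> callSubProcess(Map<String, Integer> mainVariables) {
        // <camunda:in sourceExpression="${x+5}" target="y" />
        Map<String, Integer> subVariables = new HashMap<>();
        subVariables.put("y", mainVariables.get("x") + 5);

        // The called process would run here; this sketch assumes it does not change y.

        // <camunda:out sourceExpression="${y+5}" target="z" />
        Map<String, Integer> result = new HashMap<>(mainVariables);
        result.put("z", subVariables.get("y") + 5);
        return result;
    }

    public static void main(String[] args) {
        Map<String, Integer> mainVariables = new HashMap<>();
        mainVariables.put("x", 3);
        // y = 3 + 5 = 8 inside the subprocess, z = 8 + 5 = 13 back in the main process.
        System.out.println(callSubProcess(mainVariables).get("z")); // prints 13
    }
}
```

Note that y never appears in the main process: only the target of the out mapping, z, is copied back.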

Combination with Input/Output parameters

Call activities can be combined with Input/Output parameters as well. This allows for an even more flexible mapping of variables into the called process. In order to only map variables that are declared in the inputOutput mapping, the attribute local can be used. Consider the following XML:

<callActivity id="callSubProcess" calledElement="checkCreditProcess" >

<extensionElements>

<!-- Input/Output parameters -->

<camunda:inputOutput>

<camunda:inputParameter name="var1">

<camunda:script scriptFormat="groovy">

<![CDATA[

sum = a + b + c

]]>

</camunda:script>

</camunda:inputParameter>

<camunda:inputParameter name="var2"></camunda:inputParameter>

</camunda:inputOutput>

<!-- Mapping to called instance -->

<camunda:in variables="all" local="true" />

</extensionElements>

</callActivity>

Setting local="true" means that all local variables of the execution executing the call activity are mapped into the called process instance. These are exactly the variables that are declared as input parameters.

The same can be done with output parameters:

<callActivity id="callSubProcess" calledElement="checkCreditProcess" >

<extensionElements>

<!-- Input/Output parameters -->

<camunda:inputOutput>

<camunda:outputParameter name="var1">

<camunda:script scriptFormat="groovy">

<![CDATA[

sum = a + b + c

]]>

</camunda:script>

</camunda:outputParameter>

<camunda:outputParameter name="var2"></camunda:outputParameter>

</camunda:inputOutput>

<!-- Mapping from called instance -->

<camunda:out variables="all" local="true" />

</extensionElements>

</callActivity>

When the called process instance ends, due to local="true" in the camunda:out parameter all variables are mapped to local variables of the execution executing the call activity. These variables can be mapped to process instance variables by using an output mapping. Any variable that is not declared by a camunda:outputParameter element will not be available anymore after the call activity ends.

Limitations

The other properties specified in Camunda Call Activity Documentation such as Case Instance are not supported.

Transaction Subprocess

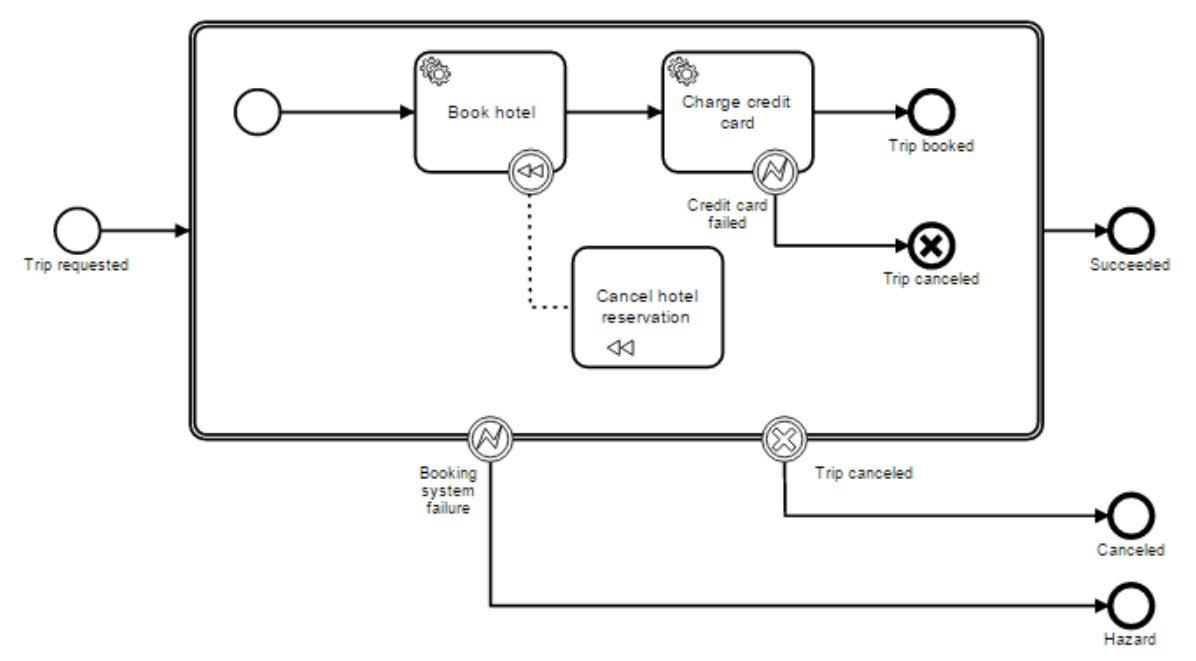

A transaction subprocess is an embedded subprocess which can be used to group multiple activities to a transaction. A transaction is a logical unit of work which allows grouping of a set of individual activities, so that they either succeed or fail collectively.

A transaction can have three different outcomes:

- A transaction is successful if it is neither canceled nor terminated by a hazard. If a transaction subprocess is successful, it is left using the outgoing sequence flow(s). A successful transaction might be compensated if a compensation event is thrown later in the process.

Note: just as “ordinary” embedded subprocesses, a transaction may be compensated after successful completion using an intermediate throwing compensation event.

- A transaction is canceled if an execution reaches the cancel end event. In that case all executions are terminated and removed. A single remaining execution is then set to the cancel boundary event, which triggers compensation. After compensation is completed the transaction subprocess is left, using the outgoing sequence flow(s) of the cancel boundary event.

- A transaction is ended by a hazard if an error event is thrown which is not caught within the scope of the transaction subprocess. (This also applies if the error is caught on the boundary of the transaction subprocess.) In this case, compensation is not performed.

The following diagram illustrates the three different outcomes:

A transaction subprocess is represented in XML using the transaction element:

<transaction id="myTransaction" >

<!-- ... -->

</transaction>

Relation to ACID transactions: it is important not to confuse the BPMN transaction subprocess with technical (ACID) transactions. The BPMN transaction subprocess is not a way to scope technical transactions.

A BPMN transaction differs from a technical transaction in the following ways:

- While an ACID transaction is typically short lived, a BPMN transaction may take hours, days or even months to complete. (Consider a case where one of the activities grouped by a transaction is a user task; typically, people have longer response times than applications. Or, in another situation, a BPMN transaction might wait for some business event to occur, like the fact that a particular order has been fulfilled.) Such operations usually take considerably longer to complete than updating a record in a database or storing a message using a transactional queue.

- Because it is impossible to scope a technical transaction to the duration of a business activity, a BPMN transaction typically spans multiple ACID transactions.

- Since a BPMN transaction spans multiple ACID transactions, we lose ACID properties. Consider the example given above. Let’s assume the “book hotel” and the “charge credit card” operations are performed in separate ACID transactions. Let’s also assume that the “book hotel” activity is successful. Now we have an intermediate inconsistent state because we have performed a hotel booking but have not yet charged the credit card. Now, in an ACID transaction, we would also perform different operations sequentially and therefore also have an intermediate inconsistent state. What is different here is that the inconsistent state is visible outside of the scope of the transaction. For example, if the reservations are made using an external booking service, other parties using the same booking service might already see that the hotel is booked. This means that when implementing business transactions, we completely lose the isolation property (granted, we usually also relax isolation when working with ACID transactions to allow for higher levels of concurrency, but there we have fine-grained control and intermediate inconsistencies are only present for very short periods of time).

- A BPMN business transaction also cannot be rolled back in the traditional sense. As it spans multiple ACID transactions, some of these ACID transactions might already be committed at the time the BPMN transaction is canceled. At that point they cannot be rolled back anymore.

Since BPMN transactions are long-running in nature, the lack of isolation and a rollback mechanism needs to be dealt with differently. In practice there is usually no better solution than to deal with these problems in a domain specific way:

- The rollback is performed using compensation. If a cancel event is thrown in the scope of a transaction, the effects of all activities that executed successfully and have a compensation handler are compensated.

- The lack of isolation is also often dealt with by using domain specific solutions. For instance, in the example above, a hotel room might appear to be booked to a second customer before we have actually made sure that the first customer can pay for it. Since this might be undesirable from a business perspective, a booking service might choose to allow for a certain amount of overbooking.

- In addition, since the transaction can be aborted in case of a hazard, the booking service has to deal with the situation where a hotel room is booked but payment is never attempted (since the transaction was aborted). In that case, the booking service might choose a strategy where a hotel room is reserved for a maximum period of time and, if payment is not received until then, the booking is canceled.
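The compensation idea from the first point can be sketched in a few lines. This is a simplified illustration, not engine code: the Activity type and the method names are hypothetical, each "activity" is assumed to have already committed its own ACID transaction when it is recorded, and the order in which handlers run here (order of completion) is a choice of the sketch, not a statement about the engine.

```java
import java.util.ArrayList;
import java.util.List;

class TransactionCompensationSketch {

    // Hypothetical activity: a name plus an optional compensation handler.
    static final class Activity {
        final String name;
        final Runnable compensationHandler; // null when no handler is declared

        Activity(String name, Runnable compensationHandler) {
            this.name = name;
            this.compensationHandler = compensationHandler;
        }
    }

    private final List<Activity> completed = new ArrayList<>();

    // Records an activity whose effects are already committed and cannot be rolled back.
    void complete(Activity activity) {
        completed.add(activity);
    }

    // Models a cancel event: every completed activity that has a handler is compensated.
    // Returns the names of the compensated activities.
    List<String> cancel() {
        List<String> compensated = new ArrayList<>();
        for (Activity activity : completed) {
            if (activity.compensationHandler != null) {
                activity.compensationHandler.run();
                compensated.add(activity.name);
            }
        }
        completed.clear();
        return compensated;
    }

    public static void main(String[] args) {
        TransactionCompensationSketch transaction = new TransactionCompensationSketch();
        transaction.complete(new Activity("book hotel",
                () -> System.out.println("compensating: cancel hotel reservation")));
        transaction.complete(new Activity("log request", null));
        // "charge credit card" fails, so the transaction is canceled before it completes.
        System.out.println("compensated: " + transaction.cancel());
    }
}
```

Activities without a handler ("log request" above) keep their effects, which is why the remaining inconsistencies have to be handled in a domain-specific way.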

To sum it up: while ACID transactions offer a generic solution to such problems (rollback, isolation levels and heuristic outcomes), we need to find domain specific solutions to these problems when implementing business transactions.

Current limitations: the BPMN specification requires that the process engine reacts to events issued by the underlying transaction protocol and, for instance, that a transaction is canceled if a cancel event occurs in the underlying protocol. As an embeddable engine, the Camunda engine currently does not support this. (For some ramifications of this, see the paragraph on consistency below.)

Consistency on top of ACID transactions and optimistic concurrency: A BPMN transaction guarantees consistency in the sense that either all activities complete successfully, or, if some activity cannot be performed, the effects of all other successful activities are compensated. So either way, we end up in a consistent state. However, it is important to recognize that in Camunda BPM, the consistency model for BPMN transactions is superposed on top of the consistency model for process execution. The Camunda engine executes processes in a transactional way. Concurrency is addressed using optimistic locking. In the engine, BPMN error, cancel and compensation events are built on top of the same ACID transactions and optimistic locking. For example, a cancel end event can only trigger compensation if it is actually reached. It is not reached if some undeclared exception is thrown by a service task before. The effects of a compensation handler cannot be committed if some other participant in the underlying ACID transaction sets the transaction to the state rollback-only. Also, when two concurrent executions reach a cancel end event, compensation might be triggered twice and fail with an optimistic locking exception. All of this is to say that when implementing BPMN transactions in the core engine, the same set of rules apply as when implementing “ordinary” processes and subprocesses. So, to effectively guarantee consistency, it is important to implement processes in a way that takes the optimistic, transactional execution model into consideration.

Camunda Transaction Subprocess Documentation

Gateways

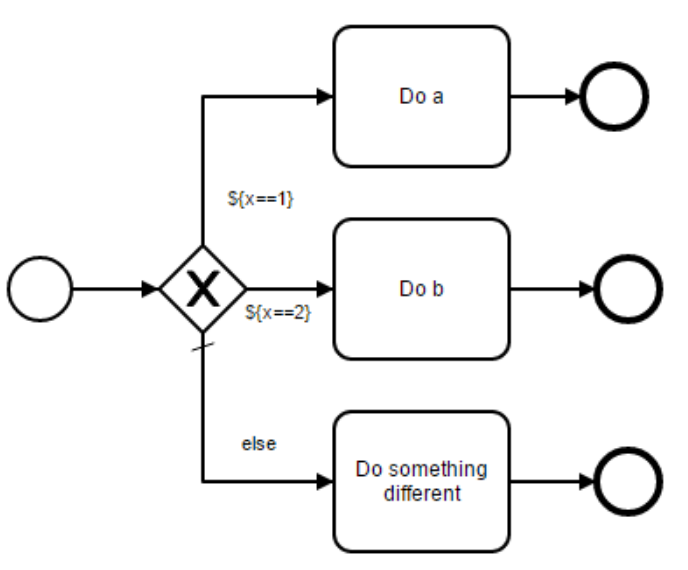

Data-based Exclusive Gateway (XOR)

An exclusive gateway (also called the XOR gateway or, in more technical terms, the exclusive data-based gateway), is used to model a decision in the process. When the execution arrives at this gateway, all outgoing sequence flows are evaluated in the order in which they have been defined. The sequence flow whose condition evaluates to ‘true’ (or which doesn’t have a condition set, conceptually having a ‘true’ value defined on the sequence flow) is selected for continuing the process.

Note that only one sequence flow is selected when using the exclusive gateway. In case multiple sequence flows have a condition that evaluates to ‘true’, the first one defined in the XML (and only that one!) is selected for continuing the process.

If no sequence flow can be selected (no condition evaluates to ‘true’) this will result in a runtime exception unless you have a default flow defined. One default flow can be set on the gateway itself in case no other condition matches - like an ‘else’ in programming languages.

Note that a gateway without an icon inside it defaults to an exclusive gateway, although we recommend using the X within the gateway if your BPMN tool gives you that option.

The XML representation of an exclusive gateway is straightforward: one line defining the gateway and condition expressions defined on the outgoing sequence flow. The default flow (optional) is set as attribute on the gateway itself. Note that the name of the flow (used in the diagram, meant for the human being) might be different than the formal expression (used in the engine).

<exclusiveGateway id="exclusiveGw" name="Exclusive Gateway" default="flow4" />

<sequenceFlow id="flow2" sourceRef="exclusiveGw" targetRef="theTask1" name="${x==1}">

<conditionExpression xsi:type="tFormalExpression">${x == 1}</conditionExpression>

</sequenceFlow>

<sequenceFlow id="flow3" sourceRef="exclusiveGw" targetRef="theTask2" name="${x==2}">

<conditionExpression xsi:type="tFormalExpression">${x == 2}</conditionExpression>

</sequenceFlow>

<sequenceFlow id="flow4" sourceRef="exclusiveGw" targetRef="theTask3" name="else">

</sequenceFlow>
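The selection rule for the gateway above (first condition that is true wins, otherwise the default flow, otherwise a runtime exception) can be sketched as follows. This is a simplified model and not the engine's code: conditions are Java predicates over a variable map instead of expressions, and the class and method names are hypothetical.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Predicate;

class ExclusiveGatewaySketch {

    // Conditional flows of the XML example, kept in definition order.
    static Map<String, Predicate<Map<String, Object>>> exampleFlows() {
        Map<String, Predicate<Map<String, Object>>> flows = new LinkedHashMap<>();
        flows.put("flow2", variables -> Integer.valueOf(1).equals(variables.get("x")));
        flows.put("flow3", variables -> Integer.valueOf(2).equals(variables.get("x")));
        return flows;
    }

    // Returns the id of the single sequence flow that is taken.
    // The flows map must preserve definition order (for example a LinkedHashMap).
    static String selectFlow(Map<String, Predicate<Map<String, Object>>> flows,
                             String defaultFlow,
                             Map<String, Object> variables) {
        for (Map.Entry<String, Predicate<Map<String, Object>>> flow : flows.entrySet()) {
            if (flow.getValue().test(variables)) {
                return flow.getKey(); // first match wins, later flows are ignored
            }
        }
        if (defaultFlow != null) {
            return defaultFlow; // the "else" branch
        }
        throw new IllegalStateException("No outgoing sequence flow could be selected");
    }

    public static void main(String[] args) {
        Map<String, Object> variables = new HashMap<>();
        variables.put("x", 2);
        System.out.println(selectFlow(exampleFlows(), "flow4", variables)); // prints flow3
        variables.put("x", 7);
        System.out.println(selectFlow(exampleFlows(), "flow4", variables)); // prints flow4
    }
}
```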

Camunda Exclusive Gateway Documentation

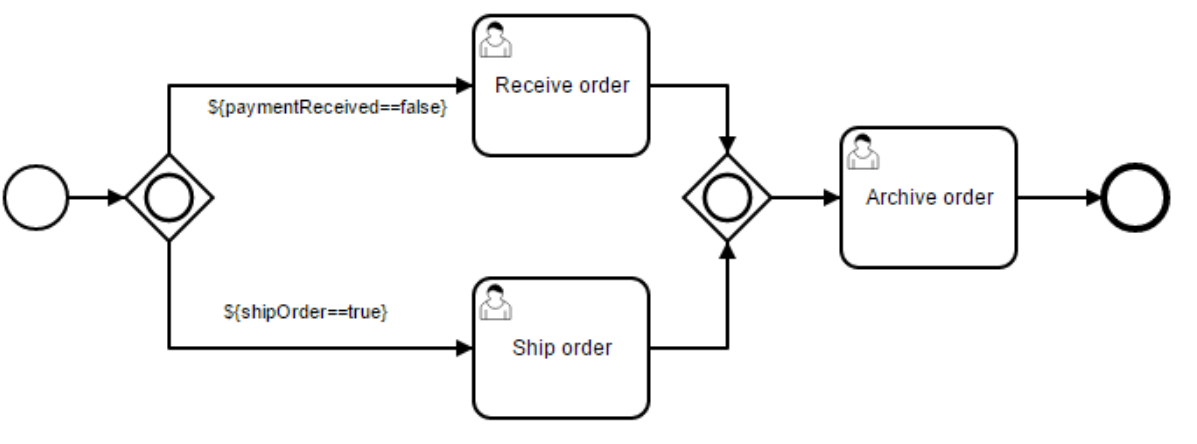

Inclusive Gateway

The Inclusive Gateway can be seen as a combination of an exclusive and a parallel gateway. Like an exclusive gateway, you can define conditions on outgoing sequence flows and the inclusive gateway will evaluate them. However, the main difference is that the inclusive gateway can take more than one sequence flow, like a parallel gateway.

The functionality of the inclusive gateway is based on the incoming and outgoing sequence flow:

- fork: all outgoing sequence flow conditions are evaluated and for the sequence flow conditions that evaluate to ‘true’ the flows are followed in parallel, creating one concurrent execution for each sequence flow.

- join: all concurrent executions arriving at the inclusive gateway wait at the gateway until an execution has arrived for each of the incoming sequence flows that have a process token. This is an important difference to the parallel gateway. So in other words, the inclusive gateway will only wait for the incoming sequence flows that will be executed. After the join, the process continues past the joining inclusive gateway.

Note that an inclusive gateway can have both fork and join behavior, if there are multiple incoming and outgoing sequence flows for the same inclusive gateway. In that case, the gateway will first join all incoming sequence flows that have a process token, before splitting into multiple concurrent paths of executions for the outgoing sequence flows that have a condition that evaluates to ‘true’.

Defining an inclusive gateway needs one line of XML:

<inclusiveGateway id="myInclusiveGateway" />

The actual behavior (fork, join or both), is defined by the sequence flows connected to the inclusive gateway. For example, the model above comes down to the following XML:

<startEvent id="theStart" />

<sequenceFlow id="flow1" sourceRef="theStart" targetRef="fork" />

<inclusiveGateway id="fork" />

<sequenceFlow sourceRef="fork" targetRef="receivePayment" >

<conditionExpression xsi:type="tFormalExpression">${paymentReceived == false}</conditionExpression>

</sequenceFlow>

<sequenceFlow sourceRef="fork" targetRef="shipOrder" >

<conditionExpression xsi:type="tFormalExpression">${shipOrder == true}</conditionExpression>

</sequenceFlow>

<userTask id="receivePayment" name="Receive Payment" />

<sequenceFlow sourceRef="receivePayment" targetRef="join" />

<userTask id="shipOrder" name="Ship Order" />

<sequenceFlow sourceRef="shipOrder" targetRef="join" />

<inclusiveGateway id="join" />

<sequenceFlow sourceRef="join" targetRef="archiveOrder" />

<userTask id="archiveOrder" name="Archive Order" />

<sequenceFlow sourceRef="archiveOrder" targetRef="theEnd" />

<endEvent id="theEnd" />

In the above example, after the process is started, two tasks will be created if the process variables satisfy paymentReceived == false and shipOrder == true. If only one of these conditions evaluates to true, only one task will be created. If no condition evaluates to true, an exception is thrown. This can be prevented by specifying a default outgoing sequence flow. In the following example one task will be created, the Ship Order task:

HashMap<String, Object> variableMap = new HashMap<String, Object>();

variableMap.put("paymentReceived", true);

variableMap.put("shipOrder", true);

UserProcess userProcess = processService.getProcess("forkJoin");

UserProcessInstance userProcessInstance = processService.startProcess(userProcess, metaDocument, variableMap);

List<ProcessTask> tasks = processService.getTasks(metaDocument, ProcessTaskStatus.PENDING);

assertEquals(1, tasks.size());

ProcessTask task = tasks.get(0);

assertEquals("Ship Order", task.getName());

When this task is completed, the second inclusive gateway joins the incoming executions (here only the one arriving from Ship Order) and, since there is only one outgoing sequence flow, no concurrent paths of execution are created and only the Archive Order task will be active.

Note that an inclusive gateway does not need to be ‘balanced’ (i.e. a matching number of incoming/outgoing sequence flows for corresponding inclusive gateways). An inclusive gateway will simply wait for all incoming sequence flows that have a process token and create a concurrent path of execution for each outgoing sequence flow whose condition evaluates to ‘true’, not influenced by other constructs in the process model.
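The fork and join rules of the inclusive gateway can be sketched in plain Java as follows. This is a simplified model, not the engine's implementation: the class `InclusiveGatewaySketch` and the flow ids `toReceivePayment` and `toShipOrder` are hypothetical (the XML example does not give ids to these flows), and the join assumes the set of incoming flows that carry a token is already known, whereas a real engine has to work that out itself.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Predicate;

// Illustrative model only: these types are hypothetical, not Camunda or Ceap classes.
final class InclusiveGatewaySketch {

    // Fork: every flow whose condition is true is taken; the default flow only if none match.
    static List<String> fork(Map<String, Predicate<Map<String, Object>>> flows,
                             String defaultFlowId,
                             Map<String, Object> variables) {
        List<String> taken = new ArrayList<>();
        for (Map.Entry<String, Predicate<Map<String, Object>>> flow : flows.entrySet()) {
            if (flow.getValue().test(variables)) {
                taken.add(flow.getKey()); // one concurrent execution per matching flow
            }
        }
        if (taken.isEmpty()) {
            if (defaultFlowId == null) {
                throw new IllegalStateException("No outgoing sequence flow could be selected");
            }
            taken.add(defaultFlowId);
        }
        return taken;
    }

    // Join: continue once every incoming flow that carries a token has arrived.
    static boolean joinCanContinue(Set<String> flowsWithToken, Set<String> arrivedFlows) {
        return arrivedFlows.containsAll(flowsWithToken);
    }

    // The fork from the XML example above, with hypothetical flow ids and no default flow.
    static List<String> forkExample(boolean paymentReceived, boolean shipOrder) {
        Map<String, Predicate<Map<String, Object>>> flows = new LinkedHashMap<>();
        flows.put("toReceivePayment", v -> Boolean.FALSE.equals(v.get("paymentReceived")));
        flows.put("toShipOrder", v -> Boolean.TRUE.equals(v.get("shipOrder")));
        Map<String, Object> variables = new HashMap<>();
        variables.put("paymentReceived", paymentReceived);
        variables.put("shipOrder", shipOrder);
        return fork(flows, null, variables);
    }
}
```

With `paymentReceived = true` and `shipOrder = true`, `forkExample` returns only `toShipOrder`, which matches the single Ship Order task in the example above. With `paymentReceived = true` and `shipOrder = false`, no condition matches and the sketch throws, because no default flow is configured.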

Camunda Inclusive Gateway Documentation

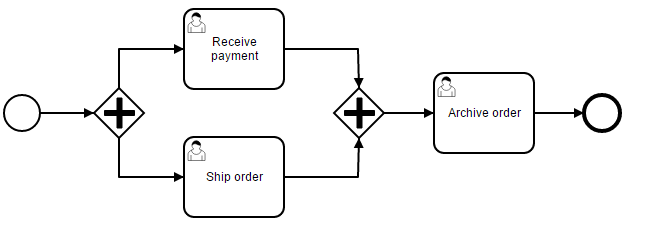

Parallel Gateway

Gateways can also be used to model concurrency in a process. The most straightforward gateway to introduce concurrency in a process model is the Parallel Gateway, which allows forking into multiple paths of execution or joining multiple incoming paths of execution.

The functionality of the parallel gateway is based on the incoming and outgoing sequence flow(s):

- fork: all outgoing sequence flows are followed in parallel, creating one concurrent execution for each sequence flow.

- join: all concurrent executions arriving at the parallel gateway wait at the gateway until an execution has arrived for each of the incoming sequence flows. Then the process continues past the joining gateway.

Note that a parallel gateway can have both fork and join behaviors, if there are multiple incoming and outgoing sequence flows for the same parallel gateway. In that case, the gateway will first join all incoming sequence flows, before splitting into multiple concurrent paths of executions.

An important difference with other gateway types is that the parallel gateway does not evaluate conditions. If conditions are defined on the sequence flow connected with the parallel gateway, they are simply ignored.
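As a rough illustration of the join behavior described above, the following plain-Java sketch tracks which incoming sequence flows have delivered an execution and reports when the gateway may continue. The class `ParallelJoinSketch` and the flow ids used with it are hypothetical and only model the rule. They are not part of the Camunda engine or the Ceap API. Note that no condition is evaluated anywhere, in line with the parallel gateway ignoring conditions.

```java
import java.util.HashSet;
import java.util.Set;

// Illustrative model only: this type is hypothetical, not a Camunda or Ceap class.
final class ParallelJoinSketch {

    private final Set<String> incomingFlows;
    private final Set<String> arrivedFlows = new HashSet<>();

    ParallelJoinSketch(Set<String> incomingFlows) {
        this.incomingFlows = new HashSet<>(incomingFlows);
    }

    // Registers an arriving execution and returns true once every incoming flow has arrived.
    boolean arrive(String flowId) {
        if (!incomingFlows.contains(flowId)) {
            throw new IllegalArgumentException("Unknown incoming flow: " + flowId);
        }
        arrivedFlows.add(flowId);
        return arrivedFlows.containsAll(incomingFlows);
    }
}
```

For a join with two incoming flows, the first call to `arrive` returns `false` (the execution waits at the gateway) and the second returns `true` (the process continues past the gateway).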

Defining a parallel gateway needs one line of XML:

<parallelGateway id="myParallelGateway" />

The actual behavior (fork, join or both), is defined by the sequence flow connected to the parallel gateway.

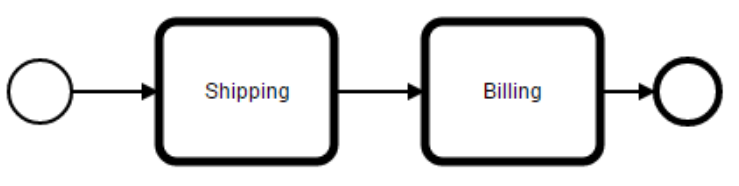

For example, the model above comes down to the following XML:

<startEvent id="theStart" />

<sequenceFlow id="flow1" sourceRef="theStart" targetRef="fork" />

<parallelGateway id="fork" />

<sequenceFlow sourceRef="fork" targetRef="receivePayment" />

<sequenceFlow sourceRef="fork" targetRef="shipOrder" />

<userTask id="receivePayment" name="Receive Payment" />

<sequenceFlow sourceRef="receivePayment" targetRef="join" />

<userTask id="shipOrder" name="Ship Order" />

<sequenceFlow sourceRef="shipOrder" targetRef="join" />

<parallelGateway id="join" />

<sequenceFlow sourceRef="join" targetRef="archiveOrder" />

<userTask id="archiveOrder" name="Archive Order" />

<sequenceFlow sourceRef="archiveOrder" targetRef="theEnd" />

<endEvent id="theEnd" />

In the above example, after the process is started, two tasks will be created:

UserProcess userProcess = processService.getProcess("forkJoin");

processService.startProcess(userProcess, metaDocument);

List<ProcessTask> tasks = processService.getTasks(metaDocument, ProcessTaskStatus.PENDING);

assertEquals(2, tasks.size());

ProcessTask task1 = tasks.get(0);

assertEquals("Receive Payment", task1.getName());

ProcessTask task2 = tasks.get(1);

assertEquals("Ship Order", task2.getName());

When these two tasks are completed the second parallel gateway will join the two executions and, as there is only one outgoing sequence flow, no concurrent paths of execution will be created, and only the Archive Order task will be active.

Note that a parallel gateway does not need to be ‘balanced’ (i.e. a matching number of incoming/outgoing sequence flows for corresponding parallel gateways). A parallel gateway will simply wait for all incoming sequence flows and create a concurrent path of execution for each outgoing sequence flow, not influenced by other constructs in the process model. So, the following process is legal in BPMN 2.0:

Camunda Parallel Gateway Documentation

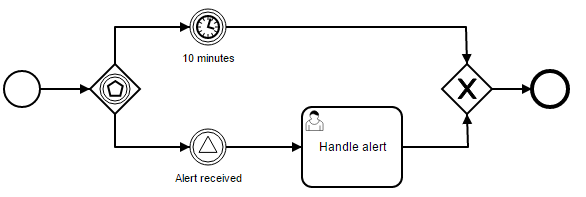

Event-based Gateway

The event-based Gateway allows you to make a decision based on events. Each outgoing sequence flow of the gateway needs to be connected to an intermediate catching event. When process execution reaches an event-based Gateway, the gateway acts like a wait state: execution is suspended. In addition, for each outgoing sequence flow, an event subscription is created.

Note that the sequence flows running out of an event-based Gateway are different from ordinary sequence flows. These sequence flows are never actually “executed”. Instead, they allow the process engine to determine which events an execution arriving at an event-based Gateway needs to subscribe to. The following restrictions apply:

- An event-based Gateway must have two or more outgoing sequence flows.

- An event-based Gateway may only be connected to elements of the type intermediateCatchEvent. (Receive Tasks after an event-based Gateway are not supported by the engine yet.)

- An intermediateCatchEvent connected to an event-based Gateway must have a single incoming sequence flow.

The following process is an example of a process with an event-based Gateway. When the execution arrives at the event-based Gateway, process execution is suspended. Additionally, the process instance subscribes to the alert signal event and creates a timer which fires after 10 minutes. This effectively causes the process engine to wait up to 10 minutes for a signal event. If the signal event occurs within 10 minutes, the timer is canceled and execution continues after the signal. If the signal is not fired, execution continues after the timer and the signal subscription is canceled.

The corresponding XML looks like this:

<definitions>

<signal id="alertSignal" name="alert" />

<process id="catchSignal">

<startEvent id="start" />

<sequenceFlow sourceRef="start" targetRef="gw1" />

<eventBasedGateway id="gw1" />

<sequenceFlow sourceRef="gw1" targetRef="signalEvent" />

<sequenceFlow sourceRef="gw1" targetRef="timerEvent" />

<intermediateCatchEvent id="signalEvent" name="Alert">

<signalEventDefinition signalRef="alertSignal" />

</intermediateCatchEvent>

<intermediateCatchEvent id="timerEvent" name="Alert">

<timerEventDefinition>

<timeDuration>PT10M</timeDuration>

</timerEventDefinition>

</intermediateCatchEvent>

<sequenceFlow sourceRef="timerEvent" targetRef="exGw1" />

<sequenceFlow sourceRef="signalEvent" targetRef="task" />

<userTask id="task" name="Handle alert"/>

<exclusiveGateway id="exGw1" />

<sequenceFlow sourceRef="task" targetRef="exGw1" />

<sequenceFlow sourceRef="exGw1" targetRef="end" />

<endEvent id="end" />

</process>

</definitions>
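The "first event wins" behavior of the process above can be sketched in plain Java. This is a minimal model under stated assumptions: the class `EventBasedGatewaySketch` is hypothetical, and the event keys `alert` and `timer:PT10M` are labels chosen for illustration. It involves no real timers or Camunda classes and only shows how triggering one subscription cancels the others.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative model only: this type is hypothetical, not a Camunda or Ceap class.
final class EventBasedGatewaySketch {

    // Event key -> id of the element that follows the corresponding catching event.
    private final Map<String, String> subscriptions = new LinkedHashMap<>();

    // Called once per outgoing sequence flow when the execution reaches the gateway.
    void subscribe(String eventKey, String nextElementId) {
        subscriptions.put(eventKey, nextElementId);
    }

    // Returns the element to continue with, or null if the event is not (or no longer) subscribed.
    String trigger(String eventKey) {
        String next = subscriptions.get(eventKey);
        if (next != null) {
            subscriptions.clear(); // the first event wins, all other subscriptions are canceled
        }
        return next;
    }

    // True while the execution is still suspended at the gateway.
    boolean isWaiting() {
        return !subscriptions.isEmpty();
    }
}
```

Subscribing `alert` with target `task` and `timer:PT10M` with target `exGw1` mirrors the XML above. If `trigger("alert")` is called first, it returns `task` and a later `trigger("timer:PT10M")` returns `null`, which corresponds to the timer being canceled.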

Camunda Event-based Gateway Documentation

Events

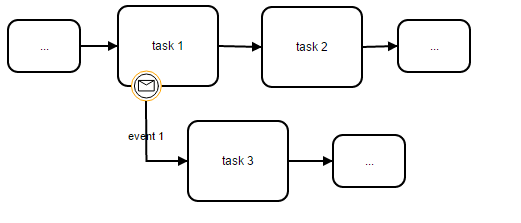

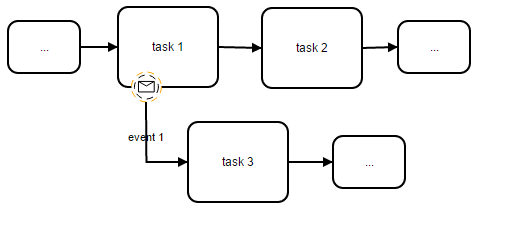

In BPMN there are Start events, Intermediate events, and End events. These three event types can be catching events and/or throwing events. Intermediate events can be used as boundary events on tasks, in which case they can be interrupting or non-interrupting. This gives you a lot of flexibility to use events in your processes.

Basic Concepts

Tasks and gateways are two of the three flow elements we've come to know so far: things (tasks) have to be done under certain circumstances (gateways). Which flow element is still missing? The things (events) that are supposed to happen. Events are no less important for BPMN process models than tasks or gateways. We should start with some basic principles for applying them. We already saw start events, intermediate events, and end events. Those three event types are also catching and/or throwing events:

Catching events are events with a defined trigger. We consider that they take place once the trigger has activated or fired. As an intellectual construct, that is relatively intricate, so we simplify by calling them catching events. The point is that these events influence the course of the process and therefore must be modeled. Catching events may result in:

- The process starting

- The process or a process path continuing

- The task currently processed or the sub-process being canceled

- Another process path being used while a task or a sub-process executes

Throwing events are assumed by BPMN to trigger themselves instead of reacting to a trigger. You could say that they are active compared to passive catching events. We call them throwing events for short, because the process triggers them. Throwing events can be:

- Triggered during the process

- Triggered at the end of the process

We can also model attached intermediate events with BPMN. These do not explicitly require waiting, but they do interrupt our activities, both tasks and sub-processes. Such intermediate events are attached because we position them at the boundary of the activity we want to interrupt.

A token running through the process would behave this way:

- The token moves to task 1, which starts accordingly.

- If event 1 occurs while task 1 is being processed, task 1 is immediately canceled, and the token moves through the exception flow to task 3.

- On the other hand, if event 1 does not occur, task 1 will be processed, and the token moves through the regular sequence flow to task 2.

- If event 1 occurs only after task 1 completes, it will be ignored.
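The token behavior listed above can be sketched as a small state machine in plain Java. This is an illustrative model of an interrupting boundary event only. The class `BoundaryEventSketch` and its method names are hypothetical, and the element names `task1`, `task2` and `task3` stand for the tasks referred to in the list.

```java
// Illustrative model only: this type is hypothetical, not a Camunda or Ceap class.
final class BoundaryEventSketch {

    enum TaskState { ACTIVE, COMPLETED, CANCELED }

    private TaskState task1State = TaskState.ACTIVE; // the token starts at task 1
    private String tokenPosition = "task1";

    // Event 1 is attached to task 1: it only has an effect while task 1 is active.
    void event1Occurs() {
        if (task1State == TaskState.ACTIVE) {
            task1State = TaskState.CANCELED;
            tokenPosition = "task3"; // exception flow
        }
        // Otherwise task 1 is already finished and the event is ignored.
    }

    // Task 1 finishes normally if it has not been canceled before.
    void completeTask1() {
        if (task1State == TaskState.ACTIVE) {
            task1State = TaskState.COMPLETED;
            tokenPosition = "task2"; // regular sequence flow
        }
    }

    String tokenPosition() {
        return tokenPosition;
    }
}
```

Calling `event1Occurs()` before `completeTask1()` moves the token to `task3`. Calling them in the opposite order leaves the token at `task2`, because the event arrives after task 1 has completed.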